Introduction

TL;DR Your automated voice system just approved a fraudulent transaction. The caller sounded exactly like your legitimate customer. Your security team discovered the breach three hours later. Thousands of dollars vanished before anyone noticed the attack.

This nightmare scenario plays out more frequently than businesses want to admit. Voice automation systems handle sensitive operations daily. Banking transactions, healthcare records, and personal data flow through these channels. Attackers discovered that speech AI security risks create lucrative opportunities.

The sophistication of voice-based attacks grows exponentially. Deepfake audio fools authentication systems convincingly. Adversarial attacks manipulate speech recognition. Social engineering exploits conversational AI weaknesses. Your voice bots face threats traditional security measures never anticipated.

This guide reveals the critical speech AI security risks threatening your automated systems. You’ll discover specific vulnerabilities attackers exploit regularly. More importantly, you’ll learn practical defenses that actually work. Your voice automation deserves protection equal to its importance.

Understanding Speech AI Security Risks

Voice-based AI systems introduce unique security challenges. Audio data creates attack surfaces that text-based systems never face. Speech contains biometric information that both enables and threatens security.

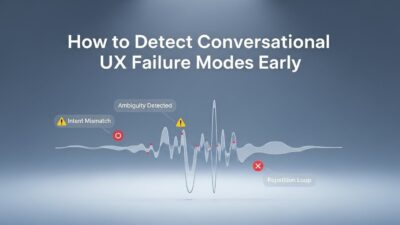

Speech AI security risks span multiple system layers. Voice biometric authentication can get spoofed. Speech recognition systems misinterpret manipulated audio. Natural language understanding falls for carefully crafted commands. Text-to-speech generation produces misleading information.

The attack surface expands with each integration point. Telephony systems connect to the internet. Cloud APIs process sensitive voice data. Third-party services introduce additional vulnerabilities. Each connection represents a potential breach point.

Attackers leverage sophisticated techniques unavailable years ago. Generative AI creates convincing voice clones. Machine learning discovers optimal adversarial perturbations. Automated tools scale attacks beyond human capacity. The threat landscape evolves faster than defenses.

Financial incentives drive continuous attack innovation. Voice systems guard valuable assets and information. Banking credentials, medical records, and corporate secrets flow through calls. Successful attacks yield immediate monetary rewards.

Regulatory frameworks struggle to keep pace. GDPR, HIPAA, and PCI-DSS established rules for data security. Voice-specific threats emerged after these regulations. Compliance doesn’t guarantee protection against modern attacks.

Understanding the threat landscape helps prioritize defenses. Not all risks deserve equal attention. Some vulnerabilities get exploited constantly. Others remain theoretical despite scary demonstrations. Your security strategy must focus on actual threats.

Voice Spoofing and Deepfake Attacks

Synthetic voice generation reached frightening sophistication. Attackers create audio that sounds indistinguishable from real people. These deepfakes fool both humans and automated systems.

Voice cloning requires surprisingly little source material. Minutes of audio suffice for convincing impersonation. Public speeches, podcasts, and social media provide abundant samples. Celebrities and executives face particular vulnerability.

The technical barriers dropped dramatically recently. Open-source tools democratized voice synthesis. Commercial services offer voice cloning subscriptions. No specialized expertise required anymore. Anyone can create convincing fakes.

Real-world attacks already happened across industries. Criminals impersonated CEOs to authorize fraudulent wire transfers. Insurance fraudsters faked accident victim voices. Political deepfakes spread misinformation during elections. The damage runs into millions of dollars.

Detection proves remarkably difficult. Human ears often cannot distinguish real from synthetic. Traditional voice biometrics fail against quality deepfakes. Attackers continuously improve synthesis quality. The arms race favors attackers currently.

Liveness detection offers partial protection. Asking speakers to repeat random phrases helps. Real-time interaction increases attack difficulty. Subtle timing and breathing patterns reveal synthesis. These techniques add friction but improve security.

Multi-factor authentication reduces reliance on voice alone. Combining voice with knowledge factors helps. Device recognition adds another verification layer. Behavioral biometrics supplement voice analysis. Defense-in-depth strategies prove essential.

Speech AI security risks from deepfakes will intensify. Synthesis quality improves monthly. Attack tools become more accessible. Your voice authentication systems need constant vigilance. Static defenses become obsolete quickly.

Adversarial Audio Attacks

Machine learning models powering speech recognition have exploitable weaknesses. Adversarial examples fool these systems reliably. Attackers add imperceptible noise that changes transcriptions completely.

The attacks work through mathematical optimization. Algorithms find minimal audio perturbations. These changes make systems transcribe attacker-chosen text. Human listeners hear only slight static. The manipulation stays below perception thresholds.

Targeted attacks prove particularly dangerous. Attackers can make systems transcribe any specific phrase. “Transfer funds to account X” might sound like innocent conversation. The speech recognizer interprets attacker commands instead. This enables precise malicious actions.

Physical adversarial examples work over-the-air. Researchers demonstrated attacks through actual speakers. Room acoustics and distance don’t prevent success. This proves attacks work in real-world conditions. Lab demonstrations translate to practical threats.

Hidden voice commands exploit ultrasonic frequencies. Humans cannot hear these high-frequency sounds. Microphones capture them perfectly. Speech recognition systems process these inaudible commands. Attackers hijack voice assistants silently.

Universal adversarial perturbations affect multiple models. A single audio modification fools different systems. This scalability increases attack efficiency dramatically. Defenders cannot rely on model-specific protections. The threat generalizes across speech AI implementations.

Defense mechanisms remain imperfect currently. Adversarial training improves robustness partially. Input sanitization filters suspicious patterns. Ensemble models provide some redundancy. None offer complete protection yet.

Speech AI security risks from adversarial audio demand serious attention. The techniques work reliably. Tools for generating attacks spread widely. Your speech recognition systems face active exploitation. Layered defenses become mandatory.

Authentication and Identity Verification Vulnerabilities

Voice biometrics promise convenient passwordless authentication. Security depends entirely on matching voices accurately. Attackers discovered numerous ways to break this assumption.

Replay attacks use recorded audio. Criminals record legitimate users speaking. They play recordings during authentication. Simple systems accept these replays blindly. The attack requires minimal technical skill.

Voice conversion attacks transform attacker voices. Software modifies pitch, timbre, and accent. The output mimics target speakers closely. Modern conversion quality fools many systems. Specialized hardware makes this accessible.

Presentation attacks manipulate the authentication process. Attackers use high-quality speakers. Reverberation and echo get controlled carefully. Systems cannot distinguish live speakers from recordings. The physical setup defeats liveness checks.

Enrollment poisoning corrupts biometric templates. Attackers submit synthetic samples during registration. Systems learn to accept attacker-controlled voices. This backdoor grants permanent access. Detection becomes nearly impossible later.

Zero-day vulnerabilities hide in proprietary systems. Voice biometric algorithms contain bugs. Specific input patterns bypass security checks. Attackers reverse-engineer these weaknesses. Vendors often discover problems only after exploitation.

Multi-modal authentication mitigates voice-only risks. Combining voice with other factors increases security. Behavioral patterns supplement voice analysis. Location and device information add context. This defense-in-depth approach proves effective.

Continuous authentication monitors entire conversations. Systems check voice characteristics throughout calls. Sudden changes trigger alerts. This detects mid-call hijacking attempts. Static authentication at call start proves insufficient.

Privacy Violations and Data Exposure

Voice automation systems process incredibly sensitive information. Every conversation contains personal data. Speech AI security risks extend beyond authentication failures to privacy breaches.

Audio recordings reveal far more than spoken words. Background conversations get captured inadvertently. Emotional states become apparent in voice characteristics. Health conditions show up in speech patterns. This metadata creates privacy concerns.

Cloud processing exposes voice data extensively. Audio travels across networks unencrypted sometimes. Third-party providers access raw recordings. Storage systems become attractive attack targets. Each hop increases exposure risk.

Data retention policies often overreach. Organizations keep recordings longer than necessary. Indefinite storage maximizes breach impact. Old recordings contain outdated but still-sensitive information. Minimal retention protects privacy better.

Unauthorized access happens through multiple paths. Employees abuse access privileges. Hackers breach poorly secured systems. Subpoenas compel data disclosure. Each access path threatens privacy.

Voice fingerprinting enables cross-database tracking. Biometric signatures identify speakers uniquely. Different organizations can correlate recordings. This tracking happens without user knowledge. Anonymity becomes impossible with voice data.

De-identification proves extremely difficult. Removing names doesn’t eliminate identifiability. Voice characteristics serve as strong identifiers. Re-identification attacks work reliably. True anonymization remains elusive.

Consent mechanisms often fail adequately. Users don’t understand data usage implications. Lengthy legal terms obscure actual practices. Opt-out requires effort most users won’t expend. Real informed consent rarely occurs.

Encryption protects data throughout its lifecycle. End-to-end encryption prevents intermediate access. Encrypted storage protects breached databases. Key management becomes critical for security. This technical measure proves essential.

Prompt Injection and Command Hijacking

Conversational AI systems accept natural language input. Attackers craft specific phrases that manipulate system behavior. These injection attacks exploit how models process instructions.

Direct prompt injection modifies system instructions. Attackers tell the system to ignore previous directives. “Forget all previous instructions” followed by malicious commands. Poorly designed systems comply with these requests. The attack succeeds through simple language.

Indirect injection uses external content sources. Systems retrieve web pages, emails, or documents. Attackers hide instructions in this content. The system follows embedded commands unknowingly. This vector proves particularly insidious.

Jailbreaking bypasses safety restrictions. Systems have built-in limits on harmful outputs. Clever prompts circumvent these protections. Attackers discover phrases that unlock restricted capabilities. The system behaves contrary to design intentions.

Goal hijacking redirects system purposes. Voice bots designed for customer service become attack tools. Injected instructions turn bots toward malicious goals. The system operates outside intended parameters. Users receive harmful or fraudulent outputs.

Multi-step attacks chain simple operations. Each individual step seems innocuous. Combined sequence achieves malicious objectives. Systems lack holistic understanding of request sequences. This defeats simple filtering approaches.

Defense requires robust instruction isolation. System prompts need protection from user inputs. Clear boundaries between instructions and data help. Sandboxing prevents cross-contamination. These architectural choices improve resilience.

Output validation catches many injection attempts. Systems check responses before delivery. Anomaly detection identifies suspicious patterns. Human review validates high-risk operations. These controls add safety layers.

Speech AI security risks from injection attacks affect all conversational systems. Voice interfaces provide convenient attack vectors. Natural language processing makes filtering difficult. Your voice bots need specific protections against manipulation.

Social Engineering Through Voice AI

Attackers leverage voice automation for sophisticated social engineering. AI-powered calls scale deception to industrial levels. Victims trust familiar-sounding voices automatically.

Vishing campaigns use voice bots extensively. Automated systems place thousands of calls. Scripts adapt based on victim responses. Success rates improve through machine learning. The economics favor attackers heavily.

Pretexting creates convincing scenarios. Bots impersonate banks, government agencies, or tech support. Voice quality sounds professional and legitimate. Victims provide sensitive information readily. The deception succeeds through apparent authenticity.

Urgency and fear increase compliance. Bots claim accounts got compromised. Immediate action supposedly prevents disaster. Victims make rushed decisions under pressure. This psychological manipulation works reliably.

Authority exploitation leverages institutional trust. Systems claim to represent legitimate organizations. Official-sounding language increases credibility. Victims assume authentication already occurred. The presumption of legitimacy proves dangerous.

Information gathering happens across multiple interactions. Initial calls collect seemingly innocuous details. Later contacts use previously gathered information. This progressive approach builds trust incrementally. The final exploitation succeeds through accumulated context.

Voice cloning amplifies social engineering effectiveness. Attackers impersonate specific individuals convincingly. CEOs, family members, or colleagues get spoofed. Victims trust familiar voices instinctively. This personalization dramatically increases success rates.

Education remains the strongest defense. Users need awareness of voice-based threats. Healthy skepticism protects better than technology alone. Verification procedures should become habitual. Cultural change takes time but pays dividends.

Technical controls supplement user awareness. Callback verification confirms caller identity. Out-of-band confirmation uses different communication channels. Transaction limits reduce potential damage. These process improvements add security layers.

Infrastructure and API Vulnerabilities

Voice automation systems depend on complex infrastructure. Each component introduces potential vulnerabilities. Speech AI security risks hide throughout the technology stack.

Telephony integration exposes systems broadly. SIP protocols contain known vulnerabilities. PBX systems often run outdated software. VoIP connections lack proper encryption. These legacy components create entry points.

API security determines system integrity. Speech recognition services need authentication. Rate limiting prevents abuse. Input validation blocks malicious payloads. Poor API security invites exploitation.

Third-party dependencies introduce risk. External speech services process sensitive audio. Library vulnerabilities affect your systems. Supply chain attacks compromise dependencies. You inherit vendor security weaknesses.

Cloud infrastructure requires proper configuration. S3 buckets accidentally become public. IAM permissions grant excessive access. Network security groups leave ports open. Misconfiguration causes most cloud breaches.

Container security protects deployed applications. Images contain vulnerable components. Runtime configuration allows escapes. Network policies need proper restriction. Containerization adds complexity requiring expertise.

Secrets management protects credentials. API keys embedded in code leak easily. Environment variables provide better separation. Dedicated secrets managers offer strongest protection. Proper rotation prevents long-term compromise.

Network segmentation limits breach impact. Voice systems should live in isolated networks. Firewall rules restrict communication paths. Zero-trust architecture assumes breach already occurred. This defensive posture contains damage effectively.

Monitoring detects attacks early. Unusual API call patterns indicate problems. Failed authentication attempts suggest probing. Anomalous data access triggers investigations. Comprehensive logging enables forensic analysis.

Compliance and Regulatory Challenges

Regulations struggle to address voice-specific security issues. Existing frameworks predate modern speech AI security risks. Organizations face uncertainty about requirements.

GDPR applies to voice data extensively. Audio recordings qualify as personal data. Biometric voice prints merit special protection. Consent requirements affect legitimate uses. Cross-border transfers face restrictions.

HIPAA governs healthcare voice systems strictly. Patient information in calls needs protection. Business associate agreements cover vendors. Breach notification timelines prove aggressive. Penalties for violations run steep.

PCI-DSS affects payment-related voice automation. Credit card information over phone requires controls. Scope reduction strategies minimize compliance burden. Tokenization protects stored payment details. Regular audits verify ongoing compliance.

Biometric privacy laws vary by jurisdiction. Some states regulate voice biometric collection. Illinois BIPA imposes strict requirements. Texas and Washington have similar laws. Multi-state operations face complex compliance.

Call recording laws differ across regions. Some jurisdictions require all-party consent. Others allow single-party notification. International calls create jurisdictional confusion. Legal counsel becomes essential for compliance.

AI-specific regulations emerge gradually. EU AI Act categorizes voice systems by risk. High-risk applications face strict requirements. Transparency and documentation become mandatory. Enforcement begins soon.

Industry standards provide guidance. NIST frameworks address AI security. ISO standards cover biometric authentication. Industry working groups develop best practices. These voluntary standards inform compliance approaches.

Documentation proves compliance. Risk assessments identify threats systematically. Security controls get documented thoroughly. Incident response procedures exist in writing. Regular testing validates effectiveness. Auditors demand evidence of diligence.

Insider Threats and Access Control

Organizations face threats from trusted insiders. Employees and contractors access voice systems legitimately. Speech AI security risks include internal malicious actions.

Privileged access enables significant damage. System administrators control everything. Database access exposes all recordings. API keys grant broad capabilities. Excessive privileges create temptation and opportunity.

Accidental exposure happens frequently. Employees mishandle sensitive recordings. Laptops containing voice data get stolen. Cloud storage links shared accidentally. Good intentions don’t prevent harm.

Malicious insiders act with sophistication. They understand system weaknesses intimately. Access appears legitimate initially. Detection requires behavioral analysis. Traditional security measures often fail.

Departing employees present particular risks. Access often continues after employment ends. Credential revocation gets overlooked. Data exfiltration happens before leaving. Exit procedures need rigorous enforcement.

Third-party vendors introduce uncontrolled access. Contractors need system access temporarily. Vendor employees receive insufficient vetting. Offshore teams create jurisdictional complexity. Supply chain security requires attention.

Role-based access control limits privileges. Users get minimum necessary permissions. Segregation of duties prevents single-person compromise. Regular access reviews catch privilege creep. This principle of least privilege reduces risk.

Activity monitoring detects suspicious behavior. Unusual data access triggers alerts. Access from unexpected locations raises flags. Bulk downloads warrant investigation. User entity behavior analytics identify anomalies.

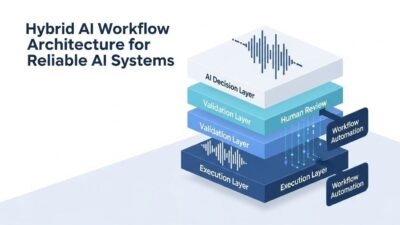

Building Defense-in-Depth Security

Single security controls fail inevitably. Layered defenses create resilience. Speech AI security risks require comprehensive protection strategies.

Perimeter security filters malicious traffic. Web application firewalls block common attacks. DDoS protection maintains availability. Rate limiting prevents abuse. These controls reduce attack surface.

Authentication layers verify identity thoroughly. Multi-factor authentication stops credential theft. Behavioral biometrics add continuous verification. Device fingerprinting provides context. Combined factors increase security dramatically.

Authorization controls limit actions. Users cannot access beyond their needs. Sensitive operations require elevated privileges. Transaction limits contain damage. Granular permissions enable precise control.

Input validation prevents injection attacks. Whitelisting accepts only known-good inputs. Sanitization removes dangerous characters. Length limits prevent buffer overflows. This defensive programming catches attacks early.

Output encoding prevents information leakage. Error messages don’t reveal system details. Stack traces stay internal. Generic responses hide implementation. This opacity frustrates attackers.

Encryption protects data everywhere. Transport encryption secures network communications. Storage encryption protects data at rest. Key management follows best practices. Cryptographic controls provide fundamental protection.

Monitoring provides visibility continuously. Security information and event management aggregates logs. Anomaly detection identifies unusual patterns. Alert correlation reduces noise. Security operations centers respond to incidents.

Incident response enables recovery. Playbooks guide team actions. Communication plans manage crises. Backup systems maintain operations. Regular drills validate preparedness. This resilience limits damage.

Testing and Validation Strategies

Theoretical security means nothing without validation. Regular testing proves defenses actually work. Speech AI security risks require specialized assessment techniques.

Penetration testing simulates real attacks. Security experts attempt system compromise. Voice-specific attack vectors get tested. Results identify actual vulnerabilities. Annual testing catches regression.

Red team exercises test complete security posture. Realistic attack scenarios unfold over time. Social engineering attempts complement technical attacks. Organizational response gets evaluated. These immersive tests reveal gaps comprehensively.

Vulnerability scanning automates basic checks. Tools identify known weaknesses. Network scans find exposed services. Application scans catch common bugs. Regular automated scanning maintains baseline security.

Adversarial testing validates AI robustness. Researchers generate adversarial examples. Deepfake audio tests voice authentication. Injection attacks probe conversational AI. These specialized tests address ML-specific risks.

Compliance audits verify regulatory adherence. Third-party assessors examine controls. Documentation gets reviewed thoroughly. Technical testing validates claims. Certifications demonstrate due diligence.

Bug bounty programs crowdsource security research. External researchers probe for weaknesses. Monetary rewards incentivize disclosure. Continuous testing happens organically. This approach scales security expertise.

Security code review catches vulnerabilities early. Peers examine code before deployment. Automated tools supplement manual review. Secure coding standards guide development. This preventive approach reduces downstream costs.

Regression testing prevents quality decay. Every update runs security test suites. Known attack vectors get retested. This ensures fixes don’t introduce new problems. Automated testing makes this practical.

Incident Response and Recovery

Security breaches will happen eventually. Preparation determines recovery success. Speech AI security risks demand specialized response procedures.

Detection speed limits damage. Monitoring identifies anomalies quickly. Automated alerts notify security teams. Triage processes prioritize incidents. Fast detection enables fast response.

Containment prevents spread. Compromised systems get isolated immediately. Network segmentation limits lateral movement. Credential resets block attacker access. Quick containment minimizes impact.

Investigation determines attack scope. Forensic analysis examines evidence. Log analysis reconstructs attacker actions. Affected data gets identified precisely. Understanding scope guides remediation.

Eradication removes attacker presence. Malware gets cleaned thoroughly. Backdoors get discovered and closed. Compromised credentials get revoked. Systems get patched before restoration.

Recovery restores normal operations. Clean backups replace compromised systems. Validation confirms attacker removal. Gradual restoration manages risk. Business continuity plans maintain critical functions.

Communication manages stakeholder concerns. Customers learn about impacts promptly. Regulators receive required notifications. Internal teams get coordinated effectively. Transparent communication maintains trust.

Post-incident review improves future response. Timeline reconstruction identifies lessons. Process improvements get documented. Training addresses identified gaps. Organizations learn from each incident.

Legal considerations complicate response. Evidence preservation supports prosecution. Attorney-client privilege protects communications. Notification requirements vary by jurisdiction. Legal counsel guides these complexities.

Future Threats and Emerging Risks

Speech AI security risks evolve constantly. Tomorrow’s threats differ from today’s. Anticipating future risks enables proactive defense.

Quantum computing threatens current encryption. Voice data encrypted today becomes vulnerable. Post-quantum cryptography offers future protection. Migration strategies need planning now. This paradigm shift approaches rapidly.

Generative AI capabilities grow exponentially. Deepfakes become indistinguishable from reality. Real-time voice conversion works flawlessly. Detection becomes nearly impossible. The authenticity problem intensifies dramatically.

IoT proliferation expands attack surface. Smart speakers populate homes and offices. Wearables capture voice constantly. Each device represents potential compromise. The connected environment becomes weaponizable.

5G networks enable sophisticated attacks. Low latency supports real-time manipulation. High bandwidth allows quality audio streaming. Edge computing reduces detection opportunities. Network evolution favors attackers partially.

Autonomous voice agents make independent decisions. They handle complex transactions without human oversight. Compromising these agents causes scaled damage. AI safety concerns merge with security. This convergence creates new challenges.

Regulatory landscape will shift significantly. Governments recognize AI-specific threats. New laws will impose requirements. Compliance burden will increase substantially. Proactive preparation beats reactive scrambling.

Attack sophistication increases predictably. Nation-state actors target voice systems. Organized crime industrializes voice fraud. Script kiddies access powerful tools. The threat diversity expands continuously.

Defense technology advances simultaneously. AI-powered security tools emerge. Behavioral analytics improve detection. Quantum-resistant encryption deploys gradually. The security industry innovates actively.

Frequently Asked Questions

What are the most dangerous speech AI security risks?

Voice deepfakes pose the greatest immediate threat. Attackers create convincing impersonations using readily available tools. Authentication systems fall for quality synthetic voices. Financial fraud and identity theft result directly. Adversarial audio attacks rank second in severity. These attacks manipulate speech recognition systems invisibly. Attackers hijack voice commands for malicious purposes. Defense mechanisms remain imperfect currently.

How can I detect deepfake voice attacks?

Detection requires multiple technical approaches. Liveness detection asks speakers to repeat random phrases. Analysis of micro-variations reveals synthesis artifacts. Behavioral biometrics track speech patterns over time. Background noise analysis identifies inconsistencies. No single method offers perfect detection. Layered approaches combining multiple techniques work best. Continuous improvement stays essential as synthesis advances.

Are voice biometrics secure enough for authentication?

Voice biometrics alone provide insufficient security. Spoofing attacks succeed against voice-only systems. Multi-factor authentication combining voice with other factors helps. Knowledge-based questions add verification layers. Device recognition provides additional context. Continuous authentication throughout calls improves security. Voice should supplement rather than replace other factors.

What regulations apply to voice AI security?

Multiple frameworks affect voice systems. GDPR protects voice data as personal information. HIPAA governs healthcare-related voice recordings. PCI-DSS applies to payment voice automation. State biometric privacy laws vary significantly. Industry standards like NIST provide guidance. Compliance requirements depend on jurisdiction and industry. Legal counsel helps navigate complexity.

How do adversarial audio attacks work?

Attackers add imperceptible noise to audio. Mathematical optimization finds effective perturbations. These changes fool speech recognition systems. Humans hear only slight static. The system transcribes attacker-chosen text instead. Physical attacks work through actual speakers. Universal perturbations affect multiple systems simultaneously. Defense requires adversarial training and input validation.

What should I do if my voice system gets breached?

Immediate containment limits damage spread. Isolate compromised systems from networks. Reset all credentials and API keys. Notify affected users promptly. Engage forensic experts for investigation. Document everything for compliance purposes. Review and update security controls. Implement lessons learned quickly. Legal counsel guides notification requirements.

How much does voice AI security cost?

Costs vary dramatically by organization size. Basic security measures require minimal investment. Commercial security tools cost thousands monthly. Enterprise solutions reach six figures annually. Staff training and expertise add ongoing costs. Incident response capabilities need budget allocation. Prevention costs less than breach recovery. ROI justifies security investment clearly.

Can I build secure voice systems without experts?

Basic security requires fundamental expertise minimally. Cloud providers offer managed security services. Third-party security vendors provide specialized tools. Open-source resources offer learning opportunities. Small projects can start with limited resources. Enterprise deployments demand professional security expertise. Consultants fill knowledge gaps effectively. Risk tolerance determines necessary investment.

What tools detect voice security threats?

Multiple specialized tools serve different purposes. Adversarial detection systems identify manipulation attempts. Voice biometric platforms include liveness checks. Security information and event management aggregate logs. Network monitoring tools track suspicious traffic. Application security testing finds vulnerabilities. No single tool covers everything. Comprehensive security requires multiple solutions.

How often should I test voice system security?

Continuous monitoring happens 24/7 automatically. Penetration testing occurs annually minimum. Vulnerability scanning runs weekly or daily. Code reviews happen before each deployment. Red team exercises occur quarterly. Adversarial testing follows major updates. Compliance audits match regulatory requirements. Frequency scales with system criticality. High-risk systems need constant attention.

Read More:-Call Outcome Prediction Models for Sales & Call Centers

Conclusion

Speech AI security risks threaten every voice automation deployment. Deepfake audio fools authentication systems convincingly. Adversarial attacks manipulate speech recognition invisibly. Social engineering scales through voice bots effectively. Your systems face sophisticated threats daily.

The attack surface expands with each new voice capability. Telephony integration, cloud processing, and API connections multiply vulnerabilities. Each component requires dedicated security attention. Comprehensive protection demands layered defenses throughout the stack.

Start with fundamental security hygiene. Encrypt voice data everywhere throughout its lifecycle. Implement strong authentication using multiple factors. Monitor continuously for suspicious patterns. These basics prevent most common attacks.

Add voice-specific protections progressively. Deploy liveness detection against spoofing. Validate inputs to prevent injection attacks. Implement behavioral analytics for anomaly detection. These specialized controls address unique threats.

Test security regularly and thoroughly. Penetration testing finds vulnerabilities before attackers do. Adversarial testing validates AI robustness. Red team exercises measure real-world resilience. Regular validation proves defenses actually work.

Build incident response capabilities proactively. Detection systems alert teams immediately. Response procedures guide efficient action. Recovery plans restore operations quickly. Preparation determines breach survival.

Maintain compliance with evolving regulations. Voice data protection faces increasing legal requirements. Documentation proves due diligence clearly. Regular audits validate ongoing compliance. Legal protection requires demonstrated effort.

Educate users about voice-based threats. Employee awareness prevents social engineering. Customer education reduces successful attacks. Security culture strengthens technical controls. Human factors matter as much as technology.

Stay informed about emerging threats. Speech AI security risks evolve constantly. New attack techniques appear regularly. Defense capabilities advance simultaneously. Continuous learning maintains security posture.

Invest appropriately in voice system security. The cost of prevention pales beside breach recovery. Reputation damage exceeds immediate financial loss. Regulatory penalties add further expense. Security investment protects business fundamentally.

Your voice automation systems deserve robust protection. Users trust these systems with sensitive information. Business operations depend on reliable service. Speech AI security risks will only intensify. Start strengthening your defenses today before attackers strike tomorrow.