Introduction

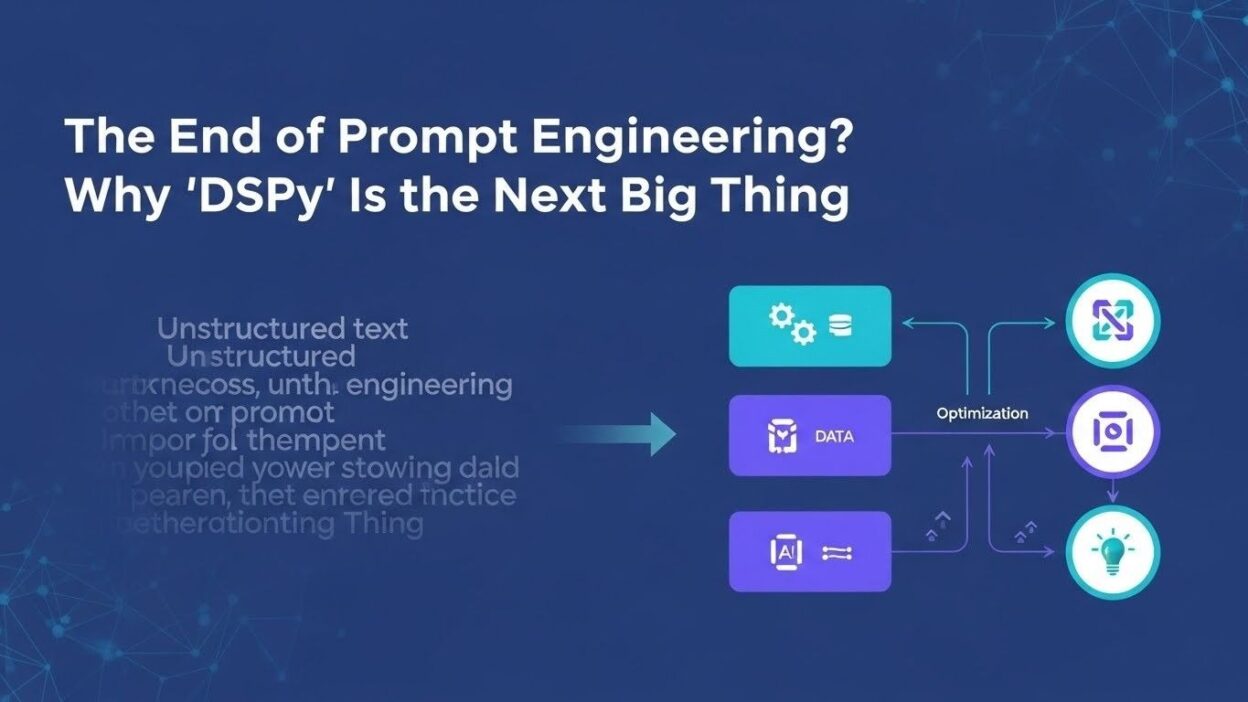

TL;DR Your team spends hours crafting the perfect prompt. You tweak every word and adjust each instruction carefully. The results still feel inconsistent and unreliable. Prompt engineering has become a specialized skill requiring constant iteration. Companies hire experts just to write better AI instructions. This manual process simply doesn’t scale for enterprise applications. DSPy has emerged as one of the most promising prompt engineering alternatives. The framework automates much of what previously required human expertise. Let’s explore why this technology could revolutionize how we build AI systems.


The Current State of Prompt Engineering

AI developers write prompts like they’re casting magic spells. Each word choice dramatically impacts model outputs. Adding “think step by step” improves reasoning quality mysteriously. These techniques feel more like art than science. The unpredictability frustrates engineering teams.

Prompt templates proliferate across organizations. Marketing teams maintain libraries of tested prompts. Customer support departments document their best instructions. Engineering groups share prompt repositories internally. The knowledge management burden grows continuously. Version control becomes increasingly complex.

Few-shot examples require constant curation. Developers manually select demonstration examples. The choice of examples affects performance significantly. Finding optimal examples demands extensive testing. The process consumes valuable development time. Results vary when examples change slightly.

Model updates break carefully crafted prompts. OpenAI’s GPT-4 Turbo behaved differently from earlier GPT-4 models. New Claude versions from Anthropic respond differently from their predecessors. Developers scramble to adjust their prompts. The maintenance overhead never ends. Your prompts become technical debt.

Why Traditional Prompt Engineering Falls Short

Manual optimization lacks systematic methodology. Developers rely on intuition and trial-and-error. No clear metrics guide improvement efforts. Success depends on individual expertise. Knowledge transfer proves difficult across teams. The approach simply doesn’t scale.

Context window limitations constrain possibilities. Long prompts consume valuable token space. You must choose between instructions and examples. Complex tasks require detailed guidance. The tradeoffs become increasingly difficult. Optimal solutions remain elusive.

Evaluation challenges plague development workflows. Determining prompt quality requires extensive testing. Manual review of outputs takes hours. Quantitative metrics prove hard to define. A/B testing demands significant infrastructure. The feedback loop moves too slowly.

Cross-model portability doesn’t exist naturally. Prompts optimized for GPT-4 often underperform on Claude. Gemini may need different instructions again. Each model demands custom engineering. Maintaining multiple versions multiplies effort. The fragmentation wastes resources.

Introducing DSPy: A Paradigm Shift

DSPy treats prompts as learnable parameters. The framework originated from Stanford researchers. Omar Khattab created the system to solve prompt engineering pain points. DSPy stands for Declarative Self-improving Python. The name reflects its core philosophy.

The framework separates what you want from how to achieve it. You declare your task objective clearly. DSPy searches for effective prompts automatically, using optimization algorithms instead of manual edits. Your role shifts from prompt writer to system designer. The abstraction level increases significantly.

Machine learning principles guide the optimization. DSPy compiles your program into effective prompts. Training data helps the system learn. Metrics define what success looks like. The framework iterates toward better performance. Your prompts improve without manual tweaking.

Modular components enable complex pipelines. Chain-of-thought reasoning becomes a module. Retrieval-augmented generation works as another component. You compose these building blocks declaratively. The system handles implementation details. Your code stays clean and maintainable.

Core Concepts Behind DSPy

Signatures define input-output specifications. You describe what goes in and what comes out. The framework generates appropriate prompts automatically. In class-based signatures, type annotations on each field tell DSPy how to format inputs and parse outputs. Your declarations stay model-agnostic. The abstraction simplifies development significantly.
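DSPy also accepts compact string signatures such as `"question -> answer"`. The toy parser below is not DSPy code and does not reproduce DSPy's prompt format; it is a hypothetical sketch (`ToySignature`, `parse_signature`, and `render_prompt` are invented names) showing how a declared input/output spec can be turned into a prompt mechanically, which is the step DSPy takes off your hands.

```python
from dataclasses import dataclass


@dataclass
class ToySignature:
    """Hypothetical stand-in for a DSPy-style signature: named inputs and outputs."""
    inputs: list[str]
    outputs: list[str]
    instructions: str = ""


def parse_signature(spec: str, instructions: str = "") -> ToySignature:
    """Parse an 'a, b -> c' string into input and output field names."""
    if spec.count("->") != 1:
        raise ValueError(f"expected exactly one '->' in {spec!r}")
    left, right = spec.split("->")
    inputs = [name.strip() for name in left.split(",") if name.strip()]
    outputs = [name.strip() for name in right.split(",") if name.strip()]
    if not inputs or not outputs:
        raise ValueError("a signature needs at least one input and one output")
    return ToySignature(inputs, outputs, instructions)


def render_prompt(sig: ToySignature, **values: str) -> str:
    """Render a plain-text prompt: instructions, filled inputs, then empty output slots."""
    missing = [name for name in sig.inputs if name not in values]
    if missing:
        raise KeyError(f"missing inputs: {missing}")
    lines = [sig.instructions] if sig.instructions else []
    lines += [f"{name.capitalize()}: {values[name]}" for name in sig.inputs]
    lines += [f"{name.capitalize()}:" for name in sig.outputs]
    return "\n".join(lines)


sig = parse_signature("context, question -> answer", "Answer using the context.")
print(render_prompt(sig, context="Paris is in France.", question="Where is Paris?"))
```

The point of the exercise is that nothing in the spec mentions wording; an optimizer is free to change the instructions while the declared fields stay fixed.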

Modules encapsulate reusable LM operations. Predict modules handle basic generation. ChainOfThought adds reasoning steps automatically. ReAct combines reasoning with tool usage. You compose modules like LEGO blocks. The composition creates sophisticated behaviors.
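In DSPy, a custom program subclasses `dspy.Module` and calls sub-modules such as `dspy.Predict` or `dspy.ChainOfThought` inside its `forward()` method. The sketch below mimics that composition pattern in plain Python so it runs offline. The classes are hypothetical stand-ins, not DSPy's implementation, and the language model is assumed to be any function from prompt text to completion text.

```python
from typing import Callable

LM = Callable[[str], str]  # assumption: any function from prompt text to completion text


class ToyPredict:
    """One LM call: formats the inputs into a prompt and returns the raw completion."""

    def __init__(self, instruction: str, lm: LM):
        self.instruction = instruction
        self.lm = lm

    def __call__(self, **inputs: str) -> str:
        body = "\n".join(f"{key}: {value}" for key, value in inputs.items())
        return self.lm(f"{self.instruction}\n{body}")


class ToyChainOfThought(ToyPredict):
    """Same call, but the instruction asks for reasoning first (a toy ChainOfThought analogue)."""

    def __init__(self, instruction: str, lm: LM):
        super().__init__(instruction + " Think step by step, then give the answer.", lm)


class SummarizeThenAnswer:
    """A two-stage pipeline composed from smaller modules, like a forward() method would."""

    def __init__(self, lm: LM):
        self.summarize = ToyPredict("Summarize the document.", lm)
        self.answer = ToyChainOfThought("Answer the question from the summary.", lm)

    def __call__(self, document: str, question: str) -> str:
        summary = self.summarize(document=document)
        return self.answer(summary=summary, question=question)


def echo_lm(prompt: str) -> str:
    """Stub LM: echoes the first line of its prompt so the data flow is visible."""
    return "[" + prompt.splitlines()[0] + "]"


pipeline = SummarizeThenAnswer(echo_lm)
print(pipeline(document="Long report text", question="What changed?"))
```

Because each stage only sees the previous stage's output, either stage can be swapped or optimized independently, which is the property the LEGO comparison points at.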

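Optimizers (originally called teleprompters) tune your DSPy programs. They search for better prompt formulations. Different optimizers suit different scenarios. BootstrapFewShot builds few-shot demonstrations from your training examples. MIPROv2 jointly tunes instructions and demonstrations using Bayesian optimization. The optimizer keeps whichever configuration scores best on your metric.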

Metrics guide the optimization process. You define success criteria programmatically. Exact match checks for specific outputs. Semantic similarity measures meaning preservation. Custom metrics address unique requirements. The framework maximizes your chosen objectives.
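DSPy metrics are ordinary Python functions that take a gold example, a prediction, and an optional `trace` argument, and return a number or a boolean. The two below follow that convention; `SimpleNamespace` objects stand in for DSPy's `Example` and `Prediction` so the snippet runs without the library. Token-overlap F1 is swapped in here as a cheap lexical proxy for semantic similarity, which in practice would usually need an embedding model or an LM judge.

```python
import re
from collections import Counter
from types import SimpleNamespace


def normalize(text: str) -> list[str]:
    """Lowercase, drop punctuation, and split into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def exact_match(example, pred, trace=None) -> bool:
    """True when the normalized predicted answer equals the gold answer."""
    return normalize(example.answer) == normalize(pred.answer)


def token_f1(example, pred, trace=None) -> float:
    """Token-overlap F1: a lexical stand-in, not true semantic similarity."""
    gold, guess = normalize(example.answer), normalize(pred.answer)
    overlap = sum((Counter(gold) & Counter(guess)).values())
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(guess), overlap / len(gold)
    return 2 * precision * recall / (precision + recall)


gold = SimpleNamespace(answer="The Eiffel Tower")
pred = SimpleNamespace(answer="Eiffel Tower")
print(exact_match(gold, pred), round(token_f1(gold, pred), 2))  # False 0.8
```

Exact match rejects the prediction outright, while F1 gives partial credit; which behavior you want depends on how strictly the task defines a correct answer.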

How DSPy Works Under the Hood

The compilation process transforms declarations into prompts. Your high-level program gets analyzed, and DSPy identifies the predictors whose instructions and demonstrations can be tuned. The system generates candidate prompt variations. Each candidate undergoes evaluation against your metric. The best performers become your production prompts.
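Stripped to its core, the generate-then-evaluate loop looks like the sketch below. It is a simplification under stated assumptions: candidates are whole instruction strings supplied up front, `run` and `metric` are hypothetical callables, and real DSPy optimizers also propose candidates with an LM and tune demonstrations, not just instructions.

```python
from typing import Callable, Sequence


def compile_instruction(
    candidates: Sequence[str],
    trainset: Sequence[tuple[str, str]],
    run: Callable[[str, str], str],
    metric: Callable[[str, str], float],
) -> tuple[str, float]:
    """Score every candidate instruction on the trainset and return the best one.

    run(instruction, question) -> predicted answer (an LM call in practice).
    metric(gold, predicted) -> score, higher is better.
    """
    if not candidates or not trainset:
        raise ValueError("need at least one candidate and one training example")
    best, best_score = candidates[0], float("-inf")
    for instruction in candidates:
        scores = [metric(gold, run(instruction, question)) for question, gold in trainset]
        mean = sum(scores) / len(scores)
        if mean > best_score:  # strict '>' keeps the earlier candidate on ties
            best, best_score = instruction, mean
    return best, best_score


def fake_run(instruction: str, question: str) -> str:
    """Stub model: echoes the last word, upper-cased only when the instruction asks."""
    answer = question.split()[-1]
    return answer.upper() if "UPPERCASE" in instruction else answer


trainset = [("shout hello", "HELLO"), ("shout world", "WORLD")]
candidates = ["Answer the question.", "Answer in UPPERCASE."]
print(compile_instruction(candidates, trainset, fake_run, lambda gold, pred: float(gold == pred)))
# ('Answer in UPPERCASE.', 1.0)
```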

Bootstrap learning uses existing examples. The framework runs your program on training inputs and creates demonstrations automatically. These self-generated demonstrations augment your dataset. Your metric serves as the quality filter, so only outputs that pass it are kept. The learning process requires minimal human input. Your system improves with available data.
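The bootstrapping idea reduces to a short loop: run a teacher program on training inputs, keep only the traces that pass the metric, and reuse those traces (including intermediate fields such as reasoning) as few-shot demonstrations. This is a simplified sketch, not DSPy's implementation; `bootstrap_demos` and `toy_teacher` are hypothetical, and the `max_demos` cap only loosely mirrors the `max_bootstrapped_demos` setting of DSPy's `BootstrapFewShot`.

```python
from typing import Callable, Sequence


def bootstrap_demos(
    trainset: Sequence[dict],
    teacher: Callable[[str], dict],
    metric: Callable[[dict, dict], bool],
    max_demos: int = 4,
) -> list[dict]:
    """Keep the teacher's full traces (intermediate fields included) that pass the metric."""
    demos = []
    for example in trainset:
        trace = teacher(example["question"])  # e.g. {"reasoning": ..., "answer": ...}
        if metric(example, trace):
            demos.append({"question": example["question"], **trace})
        if len(demos) >= max_demos:
            break
    return demos


def toy_teacher(question: str) -> dict:
    """Stub teacher that adds two numbers but is deliberately wrong when a >= 10."""
    a, b = (int(x) for x in question.split("+"))
    answer = a + b if a < 10 else a - b
    return {"reasoning": f"{a} plus {b} is {answer}", "answer": str(answer)}


trainset = [
    {"question": "2+3", "answer": "5"},
    {"question": "12+1", "answer": "13"},  # the teacher gets this one wrong
    {"question": "4+4", "answer": "8"},
]
demos = bootstrap_demos(trainset, toy_teacher, lambda ex, tr: ex["answer"] == tr["answer"])
print([d["question"] for d in demos])  # ['2+3', '4+4']
```

Note that the gold labels only judge the final answer; the kept reasoning text comes from the teacher, which is how the approach produces demonstrations for intermediate steps nobody labeled.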

Optimization algorithms explore the solution space. Random search provides a baseline approach. Bayesian optimization uses probabilistic models to predict which candidates are likely to score well. Evolutionary approaches iteratively mutate promising prompts. You choose the optimizer that fits your task, data size, and budget. The automation removes much of the guesswork.
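Random search, the baseline mentioned above, is simple enough to show whole: enumerate configurations (here, an instruction plus a fixed-size subset of candidate demonstrations), sample a budget of them, and keep the best. The search space, `toy_score`, and parameter names are illustrative assumptions; DSPy ships its own random-search optimizer, `BootstrapFewShotWithRandomSearch`, with a different interface.

```python
import itertools
import random
from typing import Callable, Sequence


def random_search(
    instructions: Sequence[str],
    demo_pool: Sequence[str],
    score: Callable[[str, tuple], float],
    k_demos: int = 2,
    budget: int = 10,
    seed: int = 0,
) -> tuple:
    """Sample (instruction, demo subset) configurations at random and keep the best."""
    space = [
        (instruction, demos)
        for instruction in instructions
        for demos in itertools.combinations(demo_pool, k_demos)
    ]
    if not space:
        raise ValueError("empty search space")
    rng = random.Random(seed)  # fixed seed makes the search reproducible
    sampled = rng.sample(space, min(budget, len(space)))
    scored = [(score(ins, demos), ins, demos) for ins, demos in sampled]
    best_score, best_ins, best_demos = max(scored, key=lambda item: item[0])
    return best_ins, best_demos, best_score


def toy_score(instruction: str, demos: tuple) -> float:
    """Hypothetical dev-set score: rewards a concise instruction and demo 'd3'."""
    return float("concise" in instruction) + 0.5 * ("d3" in demos)


print(random_search(["Answer.", "Answer concisely."], ["d1", "d2", "d3"], toy_score, budget=6))
```

With `budget=6` the whole space of 2 × 3 configurations is sampled, so the result is the true optimum (score 1.5). On larger spaces, random search trades coverage for cost, which is what smarter strategies such as Bayesian optimization try to improve on.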

Evaluation loops provide continuous feedback. Dev sets validate optimization progress. Metrics quantify improvement objectively. The system adjusts strategies based on results. Convergence criteria determine completion. Your optimized program emerges automatically.
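A dev-set loop with a simple convergence rule (stop after `patience` rounds without improvement) might look like this. `propose` and `evaluate_on_dev` are hypothetical hooks standing in for an optimizer step and a dev-set evaluation; DSPy optimizers expose their own budget settings rather than this exact rule.

```python
from typing import Callable


def optimize_until_converged(
    propose: Callable[[int], object],
    evaluate_on_dev: Callable[[object], float],
    max_rounds: int = 20,
    patience: int = 3,
    min_delta: float = 0.0,
) -> tuple[object, float, int]:
    """Run rounds until the dev score fails to improve for `patience` rounds in a row.

    Returns (best candidate, best dev score, rounds actually run).
    """
    best, best_score, stale = None, float("-inf"), 0
    for round_idx in range(max_rounds):
        candidate = propose(round_idx)
        score = evaluate_on_dev(candidate)
        if score > best_score + min_delta:
            best, best_score, stale = candidate, score, 0
        else:
            stale += 1
            if stale >= patience:
                return best, best_score, round_idx + 1
    return best, best_score, max_rounds


dev_scores = [0.5, 0.6, 0.62, 0.61, 0.60, 0.62, 0.9]
print(optimize_until_converged(lambda i: i, lambda c: dev_scores[c]))  # (2, 0.62, 6)
```

Round six only ties the best score, so the loop stops even though a later round would have reached 0.9. Patience trades optimization cost against the risk of stopping too early.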

The Technical Architecture

Python integration keeps DSPy accessible. Developers use familiar programming paradigms. Object-oriented design principles apply naturally. The learning curve stays manageable. Existing Python skills transfer directly. Your team can adopt it quickly.

Model adapters provide broad compatibility. OpenAI models, Anthropic’s Claude, and locally served open models plug in behind a common interface. The abstraction layer handles API differences. Your program code remains portable, although an optimized prompt is tuned to the model it was compiled against, so switching models usually means recompiling.
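The adapter idea is an interface that program logic depends on, with one implementation per provider. Recent DSPy versions fill this role with `dspy.LM`, which takes provider-prefixed model names. The sketch below instead uses a hypothetical `ModelBackend` protocol and two fake backends (no real SDK calls) to show why code written against the interface stays portable.

```python
from typing import Protocol


class ModelBackend(Protocol):
    """Hypothetical provider-neutral interface the rest of the program depends on."""

    def complete(self, system: str, user: str) -> str: ...


class FakeChatBackend:
    """Stands in for a chat-style API that takes a list of role/content messages."""

    def __init__(self):
        self.last_request = None

    def complete(self, system: str, user: str) -> str:
        self.last_request = [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
        ]
        return f"chat-reply to: {user}"


class FakeCompletionBackend:
    """Stands in for a plain text-completion endpoint that takes a single prompt string."""

    def __init__(self):
        self.last_request = None

    def complete(self, system: str, user: str) -> str:
        self.last_request = f"{system}\n\n{user}\n"
        return f"completion-reply to: {user}"


def answer(backend: ModelBackend, question: str) -> str:
    """Program logic written once, against the interface rather than a vendor SDK."""
    return backend.complete("Answer briefly.", question)


for backend in (FakeChatBackend(), FakeCompletionBackend()):
    print(answer(backend, "What is DSPy?"))
```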

Caching mechanisms improve efficiency. DSPy caches LM calls, so an identical request is not sent to the provider twice. Reruns of unchanged programs and optimization steps reuse earlier responses. Development iteration speeds up dramatically. Compiled programs can be saved and reloaded rather than re-optimized, which keeps costs down over time.
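The principle behind LM-call caching fits in a small wrapper: hash the full request (model, prompt, and sampling parameters) and reuse the stored response when the same request repeats. `CachedLM` is a hypothetical in-memory illustration; DSPy's own cache differs in storage and keying details.

```python
import hashlib
import json
from typing import Callable


class CachedLM:
    """Memoize LM calls keyed on model name, prompt, and sampling parameters."""

    def __init__(self, model: str, call: Callable[..., str]):
        self.model, self.call = model, call
        self.cache: dict[str, str] = {}
        self.hits = self.misses = 0

    def _key(self, prompt: str, **params) -> str:
        payload = json.dumps(
            {"model": self.model, "prompt": prompt, "params": params}, sort_keys=True
        )
        return hashlib.sha256(payload.encode()).hexdigest()

    def __call__(self, prompt: str, **params) -> str:
        key = self._key(prompt, **params)
        if key in self.cache:
            self.hits += 1
        else:
            self.misses += 1
            self.cache[key] = self.call(prompt, **params)
        return self.cache[key]


lm = CachedLM("toy-model", lambda prompt, **params: prompt[::-1])
lm("abc", temperature=0.0)
lm("abc", temperature=0.0)  # identical request: served from cache
lm("abc", temperature=0.7)  # different sampling params: a new call
print(lm.hits, lm.misses)  # 1 2
```

Including sampling parameters in the key matters: a cached answer is only valid for the exact request that produced it.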

Debugging tools help you understand behavior. Inspection utilities reveal the exact prompts sent to the model. Traces show each module’s inputs and outputs. The visibility aids troubleshooting. Your development experience improves significantly.
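DSPy's LM objects provide an `inspect_history(n=...)` method that prints the most recent prompts and completions, which is the quickest way to see what an optimizer actually produced. The wrapper below is a hypothetical, library-free version of the same idea: record every prompt/response pair and format the last few on demand.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class RecordingLM:
    """Wrap an LM callable and keep every prompt/response pair for later inspection."""
    call: Callable[[str], str]
    history: list[tuple[str, str]] = field(default_factory=list)

    def __call__(self, prompt: str) -> str:
        response = self.call(prompt)
        self.history.append((prompt, response))
        return response

    def inspect(self, n: int = 1) -> str:
        """Format the last n interactions, oldest first; n <= 0 returns an empty string."""
        recent = self.history[-n:] if n > 0 else []
        blocks = [f"--- prompt ---\n{p}\n--- response ---\n{r}" for p, r in recent]
        return "\n\n".join(blocks)


lm = RecordingLM(lambda prompt: prompt.upper())
lm("first prompt")
lm("second prompt")
print(lm.inspect(n=1))
```

The `n > 0` guard is deliberate: in Python, `history[-0:]` would return the whole list rather than nothing.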

DSPy vs Traditional Prompt Engineering

Development speed can increase dramatically with DSPy. Manual prompt crafting takes days or weeks. DSPy optimization often completes in minutes to hours. The iteration cycles compress significantly. Your team ships features faster. Time-to-market advantages accumulate.

Consistency improves across use cases. Human-written prompts vary in quality. DSPy applies optimization systematically. Every component receives equal attention. Baseline performance tends to rise across the board. Your application reliability increases.

Scalability challenges shrink with automation. Adding new features requires less prompt expertise. The framework handles much of the optimization automatically. Non-experts contribute effectively. Your organization can scale without hiring as many specialists.

Maintainability benefits emerge over time. When a model changes, you rerun the optimizer with the same program, data, and metric instead of rewriting prompts by hand. Your system adapts to new capabilities. Breaking changes get handled programmatically. The technical debt decreases substantially. Your codebase stays healthier longer.

Performance Comparisons

Benchmark results favor DSPy implementations. The original DSPy paper reports that compiled pipelines outperformed standard few-shot prompting and expert-written demonstrations on tasks such as math word problems and multi-hop question answering. Complex reasoning tasks show the largest improvements. Simple tasks maintain competitive performance. Results on your own data remain the real test.

Token efficiency can improve through optimization. DSPy may settle on concise, effective instructions, and you can cap the number of demonstrations to keep prompts short. Bootstrapped demonstrations do add tokens to every call, though. Measure prompt length and API cost before and after compiling to confirm the savings.

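Latency considerations remain important. Optimization happens during development. Production inference uses the compiled prompts, with no optimization step at request time. Runtime performance resembles that of an equivalent hand-written prompt. Modules such as ChainOfThought do add reasoning tokens, and multi-stage pipelines make several calls per request. Measure latency on your own pipeline to keep the user experience responsive.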

Robustness against edge cases can increase. DSPy explores diverse prompt formulations. The optimization can discover resilient patterns when your training data includes unusual inputs. Your system handles variations better. Production reliability improves noticeably. Customer satisfaction increases correspondingly.

Prompt Engineering Alternatives Beyond DSPy

LangChain offers prompt templates and chains. The framework provides reusable components. Template variables enable customization. Chains compose multiple LLM calls. The approach still requires manual engineering. Optimization stays largely manual.

Semantic Kernel brings structured programming. Microsoft developed the framework. Functions wrap LLM capabilities. Planners orchestrate multi-step workflows. The system lacks automatic optimization. Developers still craft prompts manually.

Guardrails AI focuses on output validation. The framework ensures response quality. Validators check for specific requirements. Re-prompting fixes validation failures. The tool complements rather than replaces. Prompt engineering remains necessary.

AutoGPT attempts autonomous operation. The system breaks down complex goals. Iterative prompting drives progress. Human oversight stays essential. The approach works for specific scenarios. General-purpose applications face limitations.

Why DSPy Stands Out

Automatic optimization distinguishes DSPy fundamentally. Other prompt engineering alternatives still require manual work. DSPy learns optimal strategies from data. The framework applies ML rigorously. Your intervention decreases dramatically. The productivity gains prove substantial.

Academic rigor underpins the approach. Stanford researchers continue developing DSPy. Published papers validate the methodology. The theoretical foundation remains solid. Community contributions enhance capabilities. The technology evolves rapidly.

Practical adoption grows steadily. Enterprises deploy DSPy in production. Startups build products on the framework. Open-source contributors expand functionality. The ecosystem develops organically. Your investment carries less risk.

Long-term vision guides development. DSPy aims beyond prompt optimization. The framework tackles broader AI engineering. Future capabilities promise even more. Early adoption positions you advantageously. The strategic value compounds.

Real-World Use Cases and Success Stories

Question answering systems benefit enormously. Complex multi-hop reasoning challenges traditional prompts. DSPy automatically optimizes reasoning chains. Published multi-hop QA results show sizable accuracy gains over hand-written few-shot prompts, though the size of the gain varies with task and model. User satisfaction increases correspondingly. The business impact justifies adoption.

Content generation workflows become more reliable. Marketing teams need consistent quality. Given example outputs and a metric that scores tone, DSPy can optimize toward a brand voice. The system adapts to style guidelines. Output variability decreases significantly. Content production scales efficiently.

Data extraction tasks gain robustness. Unstructured documents contain valuable information. Traditional prompts struggle with format variations. DSPy handles diverse document structures. Extraction accuracy reaches production requirements. Manual review needs decrease.

Customer support automation improves dramatically. Intent classification becomes more accurate. Response generation maintains better quality. Edge cases get handled gracefully. Customer satisfaction scores rise measurably. Support costs decline substantially.

Industry Adoption Trends

Financial services explore DSPy eagerly. Compliance requires high accuracy. Document analysis demands reliability. The framework meets stringent requirements. Early pilots show promising results. Full deployment plans proceed.

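Healthcare applications prioritize safety. Clinical decision support needs verification. Compiled prompts, demonstrations, and evaluation scores can be saved and inspected, so DSPy optimization is auditable in principle. Whether that transparency satisfies regulators depends on the application. Medical coding automation shows potential. Patient care improvements may follow.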

E-commerce platforms optimize recommendations. Product descriptions require consistency. Search relevance impacts conversion rates. DSPy maintains quality at scale. Revenue improvements justify investment. Customer experience metrics improve.

Technology companies lead adoption. Engineering teams appreciate systematic approaches. The framework aligns with DevOps culture. Automation resonates with technical values. Internal tools get rebuilt using DSPy. Productivity gains prove substantial.

Getting Started with DSPy

Installation takes minutes for Python developers. A single `pip install dspy` command handles dependencies. Virtual environments isolate the installation. Docker containers provide reproducible setups. The onboarding friction stays minimal. Your team can experiment immediately.

Documentation covers essential concepts. Tutorials walk through basic examples. API references detail module capabilities. Example repositories demonstrate best practices. The learning resources prove adequate. Community forums provide support.

First projects should start small. Choose well-defined tasks with clear metrics. Gather representative training data. Define success criteria explicitly. Run initial optimization experiments. The learning happens through practice.
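Gathering data and defining success criteria only pay off if some examples are held back for measurement. Below is a minimal split helper, assuming examples are plain dicts; in DSPy you would typically wrap each one as `dspy.Example(...).with_inputs("question")` before passing the training portion to an optimizer and the dev portion to evaluation. The function name and default fraction are illustrative choices.

```python
import random
from typing import Sequence, TypeVar

T = TypeVar("T")


def split_examples(
    examples: Sequence[T], dev_fraction: float = 0.3, seed: int = 13
) -> tuple[list[T], list[T]]:
    """Shuffle deterministically, then hold out a dev set the optimizer never sees."""
    if not 0 < dev_fraction < 1:
        raise ValueError("dev_fraction must be between 0 and 1")
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # seeded, so the split is reproducible
    n_dev = max(1, round(len(shuffled) * dev_fraction))
    return shuffled[n_dev:], shuffled[:n_dev]


examples = [{"question": f"q{i}", "answer": f"a{i}"} for i in range(10)]
trainset, devset = split_examples(examples)
print(len(trainset), len(devset))  # 7 3
```

Fixing the seed keeps the split stable across runs, so score changes reflect the program rather than a reshuffled dev set.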

Iteration reveals optimization insights. Metrics guide improvement direction. Optimizer settings, such as the number of demonstrations or candidate trials, affect results. Data quality matters enormously. The experimentation teaches valuable lessons. Your expertise grows organically.

Best Practices for Implementation

Data quality determines optimization success. Clean training examples produce better results. Diverse examples improve generalization. Representative samples match production distribution. The data curation deserves attention. Your outcomes depend on this foundation.

Metric selection requires careful thought. Choose measures aligning with business goals. Quantitative metrics enable optimization. Qualitative assessment validates results. The combination provides comprehensive evaluation. Your definition of success matters.

Modular design principles apply. Break complex tasks into components. Compose modules into pipelines. The separation improves maintainability. Debugging becomes easier. Your architecture stays cleaner.

Version control tracks optimization runs. Git repositories store DSPy programs. Experiment tracking logs results. The history aids debugging. Rollbacks become possible. Your development process improves.
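Alongside the program itself (DSPy modules can be written to disk with `save()` and restored with `load()`), it helps to record what produced each result. The helper below is a hypothetical convention, not a DSPy feature: one JSON record per run with the optimizer name, its settings, the dev score, and the commit hash, so runs can be compared and rolled back. The scores and commit hashes in the example are placeholders.

```python
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def save_run(directory: Path, optimizer: str, config: dict, dev_score: float, git_commit: str) -> Path:
    """Write one optimization run's metadata as a JSON file that can be committed and diffed."""
    record = {
        "optimizer": optimizer,
        "config": config,
        "dev_score": dev_score,
        "git_commit": git_commit,
        "created_at": datetime.now(timezone.utc).isoformat(),
    }
    directory.mkdir(parents=True, exist_ok=True)
    run_number = len(list(directory.glob("run-*.json"))) + 1
    path = directory / f"run-{run_number:03d}.json"
    path.write_text(json.dumps(record, indent=2, sort_keys=True))
    return path


def best_run(directory: Path) -> dict:
    """Load every run record and return the one with the highest dev score."""
    runs = [json.loads(p.read_text()) for p in sorted(directory.glob("run-*.json"))]
    return max(runs, key=lambda r: r["dev_score"])


with tempfile.TemporaryDirectory() as tmp:
    runs_dir = Path(tmp) / "runs"
    save_run(runs_dir, "BootstrapFewShot", {"max_bootstrapped_demos": 4}, 0.71, "abc1234")
    save_run(runs_dir, "MIPROv2", {"auto": "light"}, 0.78, "def5678")
    print(best_run(runs_dir)["optimizer"])  # MIPROv2
```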

Limitations and Challenges

Optimization requires computational resources. Training runs consume significant time and many LM calls. With hosted models, cost is driven mainly by API usage; with local models, GPU access accelerates the process. Cloud costs accumulate during development. The investment pays off long-term. Budget considerations matter initially.

Learning curve exists despite automation. Understanding DSPy concepts takes time. ML knowledge helps but isn’t required. The paradigm shift challenges developers. Patience during adoption proves necessary. Your team adapts gradually.

Debugging optimized prompts proves difficult. Generated prompts are plain text you can read, but they may differ from anything a person would write. Understanding why a particular instruction or demonstration set won becomes harder. Black box concerns arise occasionally. The tradeoff between automation and interpretability exists. Your comfort level varies.

Edge cases still require attention. Optimization focuses on average performance. Outliers might behave unexpectedly. Manual review catches unusual patterns. The human oversight remains valuable. Your quality assurance processes matter.
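Because optimizers maximize an average, it is worth breaking the dev-set score down by slice before shipping. A minimal sketch, assuming each result is tagged with a hypothetical slice label (document type here): a 90% overall score can hide a category the program fails completely.

```python
from collections import defaultdict
from typing import Iterable


def scores_by_slice(results: Iterable[tuple[str, float]]) -> dict[str, float]:
    """Average metric scores per slice label so weak segments are not hidden by the mean."""
    totals: dict[str, list[float]] = defaultdict(list)
    for label, score in results:
        totals[label].append(score)
    return {label: sum(scores) / len(scores) for label, scores in totals.items()}


results = [("invoice", 1.0)] * 18 + [("handwritten", 0.0)] * 2
overall = sum(score for _, score in results) / len(results)
print(overall)                   # 0.9
print(scores_by_slice(results))  # {'invoice': 1.0, 'handwritten': 0.0}
```

A slice that scores near zero is a candidate for more training examples, a dedicated module, or manual review.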

When Traditional Approaches Work Better

Simple tasks might not justify DSPy. Single-shot generations need straightforward prompts. The optimization overhead exceeds benefits. Manual approaches work perfectly fine. The complexity doesn’t warrant automation. Your judgment determines appropriateness.

Highly specialized domains face challenges. Limited training data constrains optimization. Domain expertise guides prompt crafting better. The human knowledge proves irreplaceable. DSPy complements rather than replaces. Your expertise remains essential.

Rapid prototyping favors manual approaches. Quick experiments need immediate feedback. Optimization takes time initially. Manual iteration moves faster short-term. The investment makes sense for production. Your development phase influences choices.

Interpretability requirements might conflict. Regulated industries demand explainability. Generated prompts lack clear rationale. Auditors prefer human-crafted instructions. The transparency supports compliance. Your regulatory context matters significantly.

The Future of AI Application Development

Prompt engineering alternatives will multiply. New frameworks will emerge regularly. The competition drives innovation. Each approach will find its niche. DSPy represents current leading edge. Your awareness of options matters.

Abstraction levels will increase continuously. Developers will work at higher conceptual layers. Implementation details will hide further. The productivity gains will compound. AI application development will democratize. Your competitive advantage shifts.

Automated optimization will become standard. Manual prompt crafting will seem antiquated. The tooling will improve dramatically. Best practices will crystallize. Education will emphasize new paradigms. Your skills need continuous updating.

Integration with development workflows will deepen. CI/CD pipelines will include optimization. Testing frameworks will validate prompts automatically. Monitoring will track prompt performance. The DevOps culture will extend. Your infrastructure evolves accordingly.

Frequently Asked Questions

What exactly is DSPy and how does it differ from regular prompting?

DSPy automates much of the prompt optimization process. Regular prompting requires manual crafting and iteration. DSPy treats prompts as learnable parameters. The framework compiles your program into optimized instructions. You declare what you want rather than how to achieve it. The system finds effective prompts through automated search. Traditional prompting relies on human expertise and intuition. DSPy applies machine learning principles systematically.

Do I need machine learning expertise to use DSPy?

Basic understanding helps but isn’t strictly required. DSPy abstracts many ML complexities away. Python programming skills prove more essential. Understanding optimization concepts aids intuition. The framework handles algorithmic details automatically. Documentation guides non-experts effectively. Many successful users lack formal ML training. Your willingness to learn matters most.

How long does DSPy optimization take?

Duration varies based on task complexity. Simple optimizations can finish in minutes to hours. Complex multi-step pipelines require longer. API rate limits, or GPU availability for local models, significantly affect speed. Dataset size impacts optimization time. The initial run typically takes longest. Subsequent iterations proceed faster, partly because repeated LM calls are cached. Your patience during first attempts matters.

Can DSPy work with any language model?

The framework supports major model providers. OpenAI models integrate seamlessly. Anthropic’s Claude works through adapters. Open-source models connect via APIs. Local deployments function properly. Adapter interfaces handle model differences. New models get added regularly. Your preferred model likely works.

Is DSPy better than traditional prompt engineering for all cases?

Not every scenario benefits equally. Complex reasoning tasks see dramatic improvements. Simple generations might not justify overhead. Production applications benefit most. Prototyping might favor manual approaches initially. The task complexity guides decision-making. Your specific requirements determine appropriateness. Evaluation on your use case reveals truth.

What are the main prompt engineering alternatives to consider?

DSPy leads in automatic optimization. LangChain offers template management. Semantic Kernel provides structured programming. Guidance controls generation format. Guardrails AI validates outputs. Each tool serves different needs. Your requirements guide selection. Combining approaches sometimes works best.

How much does it cost to use DSPy?

DSPy itself costs nothing. The framework is open-source and free. Compute costs for optimization exist. Model API usage incurs standard charges. Training data storage requires resources. The infrastructure investment varies. Cloud expenses accumulate during development. Your total cost depends on scale.

Can I migrate existing prompt-based applications to DSPy?

Migration proves straightforward generally. Existing prompts inform initial implementations. DSPy optimization often improves performance. The process requires some refactoring. Modular migration reduces risk. Pilot programs validate benefits. Full migration proceeds incrementally. Your application complexity affects effort.

Does DSPy work for non-English languages?

The framework supports multilingual applications. Model capabilities determine language support. Optimization principles apply universally. Training data should match target language. Evaluation metrics need appropriate definition. Many successful multilingual deployments exist. Your language likely works fine.

What skills should my team develop for DSPy adoption?

Python programming remains foundational. Understanding LLM capabilities helps significantly. Basic ML concepts aid intuition. System design principles apply naturally. Experimentation mindset proves valuable. Metric definition requires thought. Data curation skills matter greatly. Your team grows through practice.


Conclusion

Prompt engineering as we know it faces fundamental limitations. Manual optimization doesn’t scale to enterprise needs. The expertise requirements create bottlenecks. Maintenance overhead grows continuously. Model updates break carefully crafted prompts. The current approach simply can’t sustain growth.

DSPy represents a paradigm shift in AI development. The framework automates what previously required specialists. Machine learning principles guide optimization systematically. Your team works at higher abstraction levels. Development speed increases dramatically. Production reliability improves measurably.

Prompt engineering alternatives continue emerging rapidly. Each framework addresses different pain points. DSPy leads in automatic optimization. The academic foundation provides confidence. Real-world deployments validate effectiveness. Early adopters gain competitive advantages.

Implementation requires initial investment. Learning the framework takes time. Optimization consumes computational resources. The learning curve challenges some developers. Your patience during adoption matters. The long-term benefits justify short-term costs.

Success stories across industries accumulate. Financial services teams pursue higher accuracy. Healthcare applications gain necessary reliability. E-commerce platforms scale content generation. Technology companies boost productivity. The use cases prove diverse.

Getting started remains accessible. Python developers adapt quickly. Documentation guides initial efforts. Community support answers questions. Small projects build confidence. Your experimentation reveals potential.

Best practices emerge from experience. Data quality determines outcomes. Metric selection guides optimization. Modular design improves maintainability. Version control tracks progress. Your discipline enhances results.

Limitations exist and deserve acknowledgment. Simple tasks might not benefit. Specialized domains face challenges. Debugging complexity increases. Edge cases need attention. Your awareness prevents disappointment.

The future favors automated approaches. Manual prompt crafting will seem antiquated. Abstraction levels will increase. Integration will deepen. The tooling will improve. Your skills need continuous evolution.

Traditional prompt engineering won’t disappear immediately. Certain scenarios still favor manual approaches. The expertise remains valuable. Understanding fundamentals helps regardless. Your judgment determines appropriate tools. Flexibility serves you well.

Take action to explore DSPy now. Install the framework and experiment. Choose a small project initially. Measure results against current approaches. Learn through practical application. Your firsthand experience matters most.

Prompt engineering alternatives deserve evaluation. Compare different frameworks objectively. Consider your specific requirements. Test on representative tasks. Measure benefits quantitatively. Your informed decision drives success.

The AI development landscape shifts rapidly. Early adoption provides advantages. Waiting increases competitive risk. Your competitors already explore options. Technical debt accumulates meanwhile. The time to act arrives now.

DSPy might not be the final answer. Better approaches will eventually emerge. The direction toward automation seems clear. Your adaptability determines longevity. Embrace the evolution confidently. The future of AI development unfolds today.