Introduction

Your chatbot just launched last week. Users are flooding in with questions. The system responds with generic answers. Frustrated customers abandon the conversation midway. Your team scrambles to understand what went wrong.

This scenario plays out in countless organizations every day. Conversational UX failure modes sneak into systems quietly. They disrupt user experiences before anyone notices the damage. The cost runs high in lost customers and damaged brand reputation.

Detecting these failures early saves time, money, and user trust. You need proven methods to spot problems before they escalate. This guide walks you through practical strategies to identify conversational UX failure modes in real-time.

Understanding Conversational UX Failure Modes

Conversational interfaces power everything from customer service bots to virtual assistants. These systems promise seamless human-computer interaction. The reality often falls short of expectations.

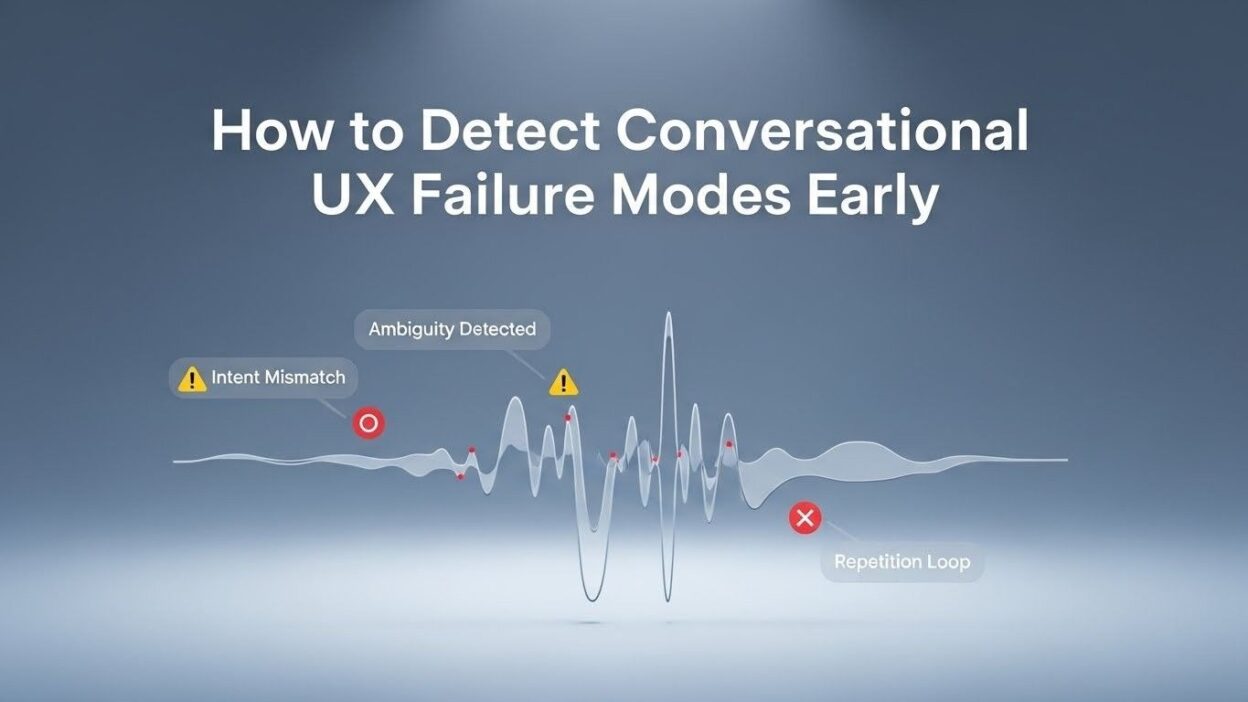

Conversational UX failure modes represent specific patterns where dialogue systems break down. The system might misunderstand user intent completely. It could provide irrelevant responses that frustrate users. Sometimes the conversation loops endlessly without resolution.

These failures differ from simple bugs or technical errors. A failure mode indicates a fundamental breakdown in communication flow. The system and user cannot establish mutual understanding.

Common triggers include ambiguous user queries. Context switching creates confusion in many dialogue systems. Multi-intent requests often overwhelm basic conversational interfaces. Edge cases expose weaknesses in training data.

The impact extends beyond individual conversations. Users lose confidence in the entire system. Support costs skyrocket as human agents intervene. Brand perception suffers when customers share negative experiences.

Understanding these patterns helps you build better detection mechanisms. You can create safeguards before users encounter problems.

The Cost of Ignoring Early Warning Signs

Many organizations dismiss early conversational UX failure modes as minor glitches. This mindset proves expensive over time. Small cracks in user experience widen into chasms.

Customer churn accelerates when conversational interfaces fail repeatedly. Users remember bad experiences more vividly than good ones. A single frustrating interaction can drive customers to competitors.

Support ticket volume increases dramatically. Human agents must handle escalations from failed bot interactions. Your team spends valuable time firefighting instead of improving the product.

Development cycles extend as teams rush to patch problems. Quick fixes often create new issues down the line. Technical debt accumulates rapidly under these conditions.

Revenue takes a direct hit from poor conversational experiences. Users abandon purchase flows when bots cannot answer simple questions. Cart abandonment rates climb steadily.

Brand reputation damage spreads through social media and reviews. Negative word-of-mouth reaches far beyond your immediate user base. Rebuilding trust requires significant investment in marketing and product improvements.

Regulatory compliance risks emerge in certain industries. Healthcare and financial services face strict communication standards. Conversational UX failure modes could expose organizations to legal liability.

The opportunity cost hurts just as much. Resources spent fixing preventable issues could drive innovation. Your team could focus on features that delight users instead.

Key Types of Conversational UX Failure Modes

Different failure patterns require different detection strategies. Recognizing these categories helps you prioritize monitoring efforts.

Intent recognition failures occur when systems misinterpret user goals. The user asks about refund policies. The bot responds with product recommendations instead. This mismatch frustrates users immediately.

Context loss represents another critical conversational UX failure mode. The system forgets previous exchanges in the conversation. Users must repeat information they already provided. This breaks the natural flow of dialogue.

Response irrelevance manifests when answers don’t match questions. The bot generates grammatically correct but contextually wrong replies. Users receive information they never requested.

Conversation loops trap users in endless cycles. The system asks the same question repeatedly. Users cannot escape to reach their goals. This creates intense frustration and abandonment.

Tone mismatches damage user relationships subtly. A formal bot responds to casual language with stiff corporate speak. The disconnect feels unnatural and off-putting.

Premature escalation sends users to human agents unnecessarily. The bot gives up too quickly on solvable problems. This wastes both user time and support resources.

Failed error recovery happens when systems cannot handle mistakes gracefully. A single misunderstanding derails the entire conversation. The bot offers no path to correct or clarify.

Understanding these patterns helps you build comprehensive monitoring systems. Each type requires specific metrics and detection methods.

Establishing Baseline Metrics for Detection

You cannot detect anomalies without knowing what normal looks like. Baseline metrics provide the foundation for early warning systems.

Conversation completion rate measures how many interactions reach successful resolution. Track this percentage across different user segments. Declining rates signal emerging conversational UX failure modes.

Average conversation length reveals efficiency trends. Very short conversations might indicate user abandonment. Extremely long exchanges suggest the bot struggles to help users.

Intent confidence scores show how certain the system is about its interpretations. Low confidence appears frequently when users phrase requests in unexpected ways. Monitor these scores across conversation turns.

Fallback frequency counts how often the system admits confusion. High fallback rates indicate gaps in training data or design. This metric highlights areas needing immediate attention.

User satisfaction ratings provide direct feedback on experience quality. Implement quick surveys at conversation endpoints. Track rating trends over time and across different conversation types.

Escalation rate measures how often bots hand off to humans. Rising escalation suggests the system cannot handle common scenarios. This metric ties directly to support costs.

Response time consistency matters for user experience. Delays create anxiety and uncertainty. Sudden spikes in latency often precede other conversational UX failure modes.

Sentiment analysis across conversations reveals emotional patterns. Negative sentiment clusters indicate friction points. This qualitative data complements quantitative metrics.

Establish these baselines during your pilot phase. Update them regularly as your user base and use cases evolve.
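As a minimal sketch of how the rates above might be computed from logged conversations, consider the snippet below. The `Conversation` record and its field names are assumptions made for illustration, not the schema of any particular platform; adapt them to whatever your logging actually captures.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Conversation:
    """Hypothetical log record; field names are assumptions for illustration."""
    turns: int            # total user + bot turns
    completed: bool       # reached a successful resolution
    escalated: bool       # handed off to a human agent
    fallback_turns: int   # bot turns that hit the fallback response


def baseline_metrics(conversations: list[Conversation]) -> dict[str, float]:
    """Compute simple baseline rates over a window of conversations."""
    if not conversations:
        raise ValueError("need at least one conversation to compute a baseline")
    n = len(conversations)
    total_turns = sum(c.turns for c in conversations)
    return {
        "completion_rate": sum(c.completed for c in conversations) / n,
        "escalation_rate": sum(c.escalated for c in conversations) / n,
        "avg_length": mean(c.turns for c in conversations),
        "fallback_rate": (
            sum(c.fallback_turns for c in conversations) / total_turns
            if total_turns else 0.0
        ),
    }


# Made-up pilot data, only to show the shape of the output.
pilot = [
    Conversation(turns=6, completed=True, escalated=False, fallback_turns=0),
    Conversation(turns=12, completed=False, escalated=True, fallback_turns=3),
    Conversation(turns=4, completed=True, escalated=False, fallback_turns=1),
    Conversation(turns=2, completed=False, escalated=False, fallback_turns=1),
]
print(baseline_metrics(pilot))
```

Running the same function over each week or user segment gives you comparable numbers, which is what makes a later drop visible.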

Real-Time Monitoring Techniques

Early detection requires continuous observation of system behavior. Real-time monitoring catches conversational UX failure modes as they happen.

Dashboard visualization puts critical metrics at your fingertips. Display conversation completion rates, intent confidence, and escalation trends. Color coding highlights metrics falling outside acceptable ranges.

Automated alerts trigger when thresholds break. Set notifications for sudden drops in completion rates. Flag conversations with multiple consecutive low-confidence turns.
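The two alert rules just described can be sketched in a few lines. The threshold values here (0.5 confidence, three turns in a row, a 10% relative drop) are placeholder assumptions; tune them against your own baselines.

```python
def consecutive_low_confidence(confidences, threshold=0.5, run_length=3):
    """Return True if `run_length` turns in a row score below `threshold`."""
    streak = 0
    for score in confidences:
        streak = streak + 1 if score < threshold else 0
        if streak >= run_length:
            return True
    return False


def completion_rate_alert(baseline, current, max_relative_drop=0.10):
    """Return True if `current` fell more than `max_relative_drop` below `baseline`."""
    if baseline <= 0:
        return False
    return (baseline - current) / baseline > max_relative_drop


# Three low-confidence turns in a row trip the first rule.
print(consecutive_low_confidence([0.9, 0.4, 0.3, 0.2]))
# A fall from 80% to 70% completion is a 12.5% relative drop, above the 10% limit.
print(completion_rate_alert(baseline=0.80, current=0.70))
```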

Conversation replay tools let you watch interactions unfold. Observe exactly where users get stuck or frustrated. This qualitative insight complements quantitative data.

Live conversation tracking shows active sessions in real-time. Identify struggling conversations before they end in failure. Your team can intervene or learn from these edge cases.

Pattern recognition algorithms detect recurring failure sequences. Machine learning models identify common paths to conversational UX failure modes. These insights inform proactive improvements.

A/B testing frameworks compare different conversation flows. Measure which variations produce better outcomes. This data-driven approach reduces guesswork in design decisions.

Session recording captures complete interaction histories. Review these recordings during team retrospectives. Identify subtle issues that metrics alone might miss.

Heat mapping shows where users concentrate their inputs. Discover questions the system handles poorly. This guides content development priorities.

Integration with analytics platforms connects conversational data to broader user journeys. Understand how conversation quality affects downstream behavior.

Analyzing User Intent Confusion

Intent confusion represents one of the most common conversational UX failure modes. Users say one thing but the system hears another.

Multi-intent utterances challenge many dialogue systems. A user might ask about pricing and availability simultaneously. Systems trained on single-intent examples struggle with this complexity.

Synonym mismatches occur when users employ unexpected vocabulary. They say “fix” but your training data only includes “repair.” The system fails to connect equivalent terms.

Domain-specific jargon varies across user populations. Industry professionals use technical language. Consumers prefer plain terms for the same concepts. Your system must handle both gracefully.

Ambiguous pronouns create reference problems. “Can you help me with it?” lacks necessary context. The system cannot resolve what “it” means without conversation history.

Implicit requests hide user intent behind indirect language. “It’s cold in here” might mean “increase the temperature.” Literal interpretation misses the actual goal.

Cultural and regional variations introduce unpredictability. The same phrase carries different meanings across geographies. Global systems must account for these nuances.

Track intent confusion through confidence score analysis. Low scores often indicate interpretation uncertainty. Review conversations where confidence drops below 0.7.

Compare predicted intents against user reformulations. When users rephrase immediately, the first interpretation probably missed. This pattern reveals training gaps.
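One rough way to combine the confidence check and the reformulation check is shown below. Word overlap (Jaccard similarity) stands in for a real paraphrase detector, and the tuple layout, intent names, and 0.5 overlap cutoff are all assumptions for illustration.

```python
def token_overlap(a: str, b: str) -> float:
    """Jaccard similarity between the lowercase word sets of two utterances."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    if not set_a or not set_b:
        return 0.0
    return len(set_a & set_b) / len(set_a | set_b)


def flag_turns(turns, confidence_floor=0.7, min_overlap=0.5):
    """Return (index, reason) pairs for user turns worth reviewing.

    `turns` is a list of (user_text, predicted_intent, confidence) tuples.
    """
    flags = []
    for i, (text, _intent, confidence) in enumerate(turns):
        if confidence < confidence_floor:
            flags.append((i, "low_confidence"))
        if i > 0 and token_overlap(turns[i - 1][0], text) >= min_overlap:
            # The user rephrased right away, so the previous interpretation is suspect.
            flags.append((i - 1, "reformulated_after"))
    return flags


session = [
    ("how do i fix my router", "product_recommendation", 0.82),
    ("how do i repair my router", "troubleshooting", 0.91),
    ("is it still under warranty", "warranty_check", 0.55),
]
print(flag_turns(session))
```

Note that the first turn is flagged even though its confidence was high. A confident wrong answer followed by an immediate rephrase is exactly the case that confidence scores alone miss.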

Cluster similar confused intents together. Patterns emerge showing systematic misunderstandings. Address these clusters in your next training iteration.

Test with diverse user groups regularly. Different demographics express needs differently. Your training data should reflect this diversity.

Monitoring Context Retention Issues

Context makes conversations feel natural and efficient. Losing context transforms smooth dialogue into frustrating repetition.

Session state management determines how much the system remembers. Track how many turns the system can go while still holding relevant context. Degradation here causes numerous conversational UX failure modes.

Entity extraction accuracy affects context building. The system must identify and store key information correctly. Names, dates, account numbers, and preferences all matter.

Slot filling completeness measures whether the system gathers necessary information. Incomplete slot filling forces users to repeat themselves. This breaks conversational flow.

Context switching challenges occur in complex interactions. Users jump between topics naturally. Systems must recognize these shifts and adjust accordingly.

Anaphora resolution determines how well bots handle references. “What about the other one?” requires understanding previous mentions. Failed resolution confuses the entire exchange.

Memory decay issues appear in longer conversations. The system might forget earlier turns as the dialogue extends. Users expect consistency throughout the interaction.

Cross-session context presents additional complexity. Returning users want the system to remember previous conversations. Starting fresh every time feels impersonal and inefficient.

Monitor context-dependent accuracy separately from overall performance. A system might handle initial queries well but fail on follow-ups. This disparity indicates context problems.
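A simple way to see this disparity is to split accuracy by turn position. The sketch below assumes you already have a per-turn correctness label (from manual review or a golden set); the `(turn_index, correct)` pair format is a hypothetical choice.

```python
def accuracy_by_position(turns):
    """Split accuracy into first-turn and follow-up buckets.

    `turns` is an iterable of (turn_index, correct) pairs, where turn_index 0
    is the opening user message of a conversation.
    """
    turns = list(turns)
    first = [correct for index, correct in turns if index == 0]
    follow = [correct for index, correct in turns if index > 0]

    def rate(values):
        return sum(values) / len(values) if values else None

    return {"first_turn": rate(first), "follow_up": rate(follow)}


# Made-up labels: opening turns do well, follow-ups do not.
labelled = [
    (0, True), (0, True), (0, True), (0, False),
    (1, True), (1, False), (2, False), (3, False),
]
print(accuracy_by_position(labelled))
```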

Review conversations where users explicitly repeat information. “I already told you my account number” signals context loss. These moments deserve immediate attention.
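A keyword pass over user messages is a cheap first filter for these moments. The phrase list below is a hypothetical starting point, not an exhaustive pattern; extend it with the wording you actually see in your logs.

```python
import re

# Hypothetical phrase list; extend it with wording seen in your own logs.
REPEAT_SIGNALS = re.compile(
    r"\b(i (already|just) (told|said|gave|mentioned)|as i said|like i said|"
    r"i said (that )?(before|earlier|already))\b",
    re.IGNORECASE,
)


def find_context_loss_signals(user_messages):
    """Return indexes of user messages that complain about repeating information."""
    return [i for i, msg in enumerate(user_messages) if REPEAT_SIGNALS.search(msg)]


messages = [
    "My account number is 12345",
    "I want to change my plan",
    "I already told you my account number",
]
print(find_context_loss_signals(messages))
```

Matches are candidates for review, not proof of context loss, so pair this with a manual look at the surrounding turns.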

Implement context verification checkpoints in critical flows. Confirm the system retained necessary information before proceeding. This prevents downstream failures.

Test context retention across various conversation lengths. Short exchanges might succeed while longer ones fail. Identify the breaking point for your system.

Identifying Response Quality Problems

Accurate intent recognition means nothing if responses disappoint users. Response quality directly impacts user satisfaction and trust.

Relevance scoring evaluates how well answers match questions. Even correct information presented at the wrong time frustrates users. Context determines relevance as much as accuracy.

Completeness assessment checks whether responses fully address user needs. Partial answers force follow-up questions. Users prefer comprehensive responses in single turns.

Clarity measurements examine how easily users understand responses. Technical jargon confuses general audiences. Overly simple explanations insult sophisticated users.

Consistency verification ensures the system doesn’t contradict itself. Conflicting information across conversations damages credibility. Users lose trust when answers change randomly.

Tone appropriateness matters for relationship building. Formal language feels cold in casual contexts. Overly casual responses seem unprofessional in serious situations.

Actionability determines whether users can act on information provided. Vague guidance leaves users stuck. Specific next steps move conversations toward resolution.

Response timing affects perceived quality. Instant replies to complex questions seem superficial. Reasonable processing time suggests thoughtful answers.

Track user reactions immediately following responses. Do they ask clarifying questions? Do they reformulate their request? These signals indicate response quality issues.

Implement response rating mechanisms at the turn level. Users can mark individual responses as helpful or not. This granular feedback pinpoints quality problems.

Compare responses across similar intents. Inconsistency here reveals quality control gaps. Standardize high-performing responses as templates.

A/B test different response formulations. Measure which versions drive better outcomes. Continuously optimize based on user behavior data.

Detecting Conversation Flow Breakdowns

Smooth conversation flows guide users naturally toward their goals. Breakdowns leave users confused about next steps.

Dead-end detection identifies paths with no clear continuation. The conversation reaches a point where users cannot proceed. This represents a critical conversational UX failure mode.

Loop identification finds repetitive interaction patterns. The system asks the same question across multiple turns. Users circle back to previous states without progress.

Branch complexity analysis examines decision trees. Overly complex flows overwhelm users with choices. Simplified paths often perform better.

Navigation failures occur when users cannot return to previous states. They want to change earlier answers but find no mechanism. Frustration builds rapidly.

Premature termination happens when the system ends conversations too early. Users still need help but the bot considers the task complete. This mismatch creates dissatisfaction.

Unexpected exits show users abandoning conversations abruptly. High exit rates at specific points indicate design problems. These friction points need immediate investigation.

Clarification loops trap users in endless question cycles. The system keeps asking for clarification it cannot use effectively. Users give up after several failed attempts.

Track conversation state transitions carefully. Visualize common paths through your dialogue system. Identify states with high abandonment or backtracking.
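If your dialogue system logs the sequence of states each session passes through, both loop detection and abandonment hot spots fall out of simple counting. The state names and the `(path, completed)` session format below are assumptions for illustration.

```python
from collections import Counter


def looping_states(path, max_visits=3):
    """Return states visited at least `max_visits` times within one session."""
    visits = Counter(path)
    return sorted(state for state, count in visits.items() if count >= max_visits)


def abandonment_by_state(sessions):
    """Count the last state reached in sessions that did not complete.

    `sessions` is a list of (state_path, completed) pairs.
    """
    exits = Counter(path[-1] for path, completed in sessions if path and not completed)
    return exits.most_common()


stuck = ["greet", "ask_order_id", "clarify", "ask_order_id", "clarify", "ask_order_id"]
print(looping_states(stuck))

sessions = [
    (["greet", "ask_order_id", "confirm"], True),
    (["greet", "ask_order_id"], False),
    (["greet", "ask_order_id", "clarify", "ask_order_id"], False),
    (["greet"], False),
]
print(abandonment_by_state(sessions))
```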

Monitor turn-taking patterns for anomalies. Natural conversations alternate between user and system smoothly. Stuttering or one-sided exchanges indicate problems.

Measure time spent in each conversation state. States where users linger unusually long suggest confusion. Quick transitions might indicate the state adds no value.

Test edge cases deliberately in your flows. What happens when users say “no” repeatedly? How does the system handle unexpected inputs at each step?

Leveraging User Feedback Mechanisms

Direct user feedback provides invaluable insights into conversational UX failure modes. Smart collection and analysis turn opinions into actionable improvements.

In-conversation feedback captures sentiment at the moment of experience. Quick thumbs up or down buttons require minimal effort. Users provide honest reactions when friction is fresh.

Post-conversation surveys gather more detailed reflections. Ask specific questions about intent understanding and response quality. Keep surveys short to maximize completion rates.

Free-text feedback reveals issues metrics cannot capture. Users describe problems in their own words. This qualitative data often highlights unexpected failure modes.

Escalation reasons tell you why users abandon bot conversations. Require human agents to categorize handoff reasons. These categories reveal systematic conversational UX failure modes.

Social media monitoring catches public complaints and praise. Users share frustrating bot experiences online readily. These unsolicited insights supplement formal feedback.

Support ticket analysis connects conversation failures to downstream problems. Tickets filed after bot interactions often relate to those conversations. Pattern analysis across these tickets helps surface likely causes.

Net Promoter Score tracking measures overall satisfaction trends. Declining NPS often correlates with emerging conversation quality issues. This metric provides executive visibility.
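For reference, NPS is conventionally the percentage of promoters (ratings of 9 or 10) minus the percentage of detractors (ratings of 0 through 6) on a 0 to 10 scale. A small sketch, with made-up ratings:

```python
def net_promoter_score(ratings):
    """Return NPS in the range [-100, 100] from 0-10 survey ratings."""
    ratings = list(ratings)
    if not ratings:
        raise ValueError("need at least one rating")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


# 5 promoters and 3 detractors out of 10 responses.
sample = [10, 9, 8, 7, 6, 3, 10, 9, 5, 10]
print(net_promoter_score(sample))
```

Computing it separately for users who did and did not talk to the bot is one way to check whether conversation quality is what moves the score.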

Implement feedback prompts strategically throughout conversations. After completing a transaction, ask about the experience. When escalating to humans, ask what the bot missed.

Close the feedback loop publicly when possible. Show users how their input drove improvements. This encourages continued participation in feedback programs.

Segment feedback by user type and conversation category. B2B users have different expectations than consumers. Purchase support differs from general inquiries.

Weight feedback appropriately in decision-making. Vocal minorities sometimes dominate feedback channels. Balance qualitative insights with quantitative usage data.

Building Predictive Failure Detection Models

Reactive detection catches problems as they happen. Predictive models identify conversational UX failure modes before users encounter them.

Historical conversation analysis reveals failure precursors. Certain patterns consistently precede negative outcomes. Machine learning models learn these warning signs.

Feature engineering transforms conversation data into predictive signals. Intent confidence trajectories, entity extraction patterns, and dialogue state sequences all matter. Effective features capture the essence of conversation health.

Training data preparation requires careful labeling. Mark conversations as successful or failed based on multiple criteria. Include partial failures where users achieved goals despite friction.

Model selection depends on your specific use case. Decision trees offer interpretability for understanding failure modes. Neural networks might provide better prediction accuracy.

Validation testing ensures models generalize beyond training data. Split your dataset chronologically rather than randomly. This tests whether models predict future failures accurately.
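A chronological split can be as simple as sorting by timestamp and cutting once. The record layout below (an `ended_at` date and a `failed` label) is a hypothetical example.

```python
from datetime import date


def chronological_split(records, train_fraction=0.8):
    """Earliest conversations go to training, the most recent to testing."""
    if not 0 < train_fraction < 1:
        raise ValueError("train_fraction must be between 0 and 1")
    ordered = sorted(records, key=lambda r: r["ended_at"])
    cut = int(len(ordered) * train_fraction)
    return ordered[:cut], ordered[cut:]


records = [
    {"id": "c3", "ended_at": date(2024, 3, 1), "failed": False},
    {"id": "c1", "ended_at": date(2024, 1, 5), "failed": True},
    {"id": "c5", "ended_at": date(2024, 5, 20), "failed": True},
    {"id": "c2", "ended_at": date(2024, 2, 11), "failed": False},
    {"id": "c4", "ended_at": date(2024, 4, 9), "failed": False},
]
train, test = chronological_split(records)
print([r["id"] for r in train], [r["id"] for r in test])
```

Because every test conversation ended after every training conversation, the evaluation never lets the model peek at the future, which a random shuffle would.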

Threshold tuning balances false positives and false negatives. A threshold that is too sensitive floods the team with false alarms. One that is too conservative misses critical problems.
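To make the trade-off concrete, you can compute precision and recall at a few candidate thresholds over held-out conversations. The scores and labels below are invented for illustration; `True` means the conversation actually failed.

```python
def precision_recall(scores, labels, threshold):
    """Precision and recall when a score >= threshold predicts failure."""
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, failed in pairs if s >= threshold and failed)
    fp = sum(1 for s, failed in pairs if s >= threshold and not failed)
    fn = sum(1 for s, failed in pairs if s < threshold and failed)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


scores = [0.95, 0.80, 0.65, 0.40, 0.30, 0.10]
labels = [True, True, False, True, False, False]

for threshold in (0.2, 0.5, 0.9):
    precision, recall = precision_recall(scores, labels, threshold)
    print(f"threshold={threshold}: precision={precision:.2f} recall={recall:.2f}")
```

On this toy data the low threshold catches every failure but two of its five flags are false alarms, while the high threshold raises no false alarms and misses two of the three real failures.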

Real-time scoring applies models to active conversations. Calculate failure probability as conversations unfold. Trigger interventions when probabilities exceed thresholds.

Feature importance analysis reveals which signals matter most. Understanding drivers helps prioritize monitoring and improvement efforts. This insight guides product development.

Continuous retraining keeps models current as user behavior evolves. Schedule regular updates with fresh conversation data. Monitor model performance metrics carefully.

Ensemble approaches combine multiple models for better accuracy. Different models capture different failure patterns. Aggregated predictions often outperform individual models.

Explainable AI techniques help teams trust and act on predictions. Understanding why a model flags a conversation builds confidence. Transparency enables effective human-in-the-loop systems.

Implementing Automated Testing Protocols

Manual testing cannot cover all potential conversational UX failure modes. Automated protocols provide comprehensive and consistent coverage.

Regression testing verifies that improvements don’t introduce new problems. Test existing conversation flows after every update. Catch breaking changes before users do.

Scenario-based testing simulates real user conversations. Create test cases covering common intents and edge cases. Run these scenarios continuously to validate system behavior.

Utterance variation testing checks robustness to phrasing differences. Generate paraphrases of key user inputs automatically. Ensure the system handles linguistic diversity.

Load testing reveals performance degradation under scale. High traffic volumes expose conversation quality issues. Some conversational UX failure modes only appear under load.

Integration testing validates connections between conversation components. Intent recognition, entity extraction, and response generation must work together. Test the complete pipeline regularly.

Golden dataset comparison measures consistency over time. Maintain a curated set of conversation examples with expected outcomes. Track how system performance changes across versions.
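A golden-set check can be a short script that runs on every version. In the sketch below, `classify_intent` is a keyword stub standing in for your real NLU call, and the utterances and intent names are hypothetical.

```python
GOLDEN_SET = [
    ("what is your refund policy", "refund_policy"),
    ("i want my money back", "refund_policy"),
    ("recommend a laptop", "product_recommendation"),
    ("where is my order", "order_status"),
]


def classify_intent(utterance: str) -> str:
    """Stand-in for a real NLU call; keyword rules for demonstration only."""
    text = utterance.lower()
    if "refund" in text or "money back" in text:
        return "refund_policy"
    if "recommend" in text:
        return "product_recommendation"
    if "order" in text:
        return "order_status"
    return "fallback"


def golden_accuracy(classifier, golden_set):
    """Return (accuracy, mismatches) for a classifier against expected intents."""
    mismatches = []
    for utterance, expected in golden_set:
        predicted = classifier(utterance)
        if predicted != expected:
            mismatches.append((utterance, expected, predicted))
    return 1 - len(mismatches) / len(golden_set), mismatches


accuracy, mismatches = golden_accuracy(classify_intent, GOLDEN_SET)
print(accuracy, mismatches)
```

Storing the accuracy alongside each release tag gives you the version-over-version trend, and the mismatch list tells you which examples regressed.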

Adversarial testing probes system boundaries deliberately. Input nonsense, contradictions, and edge cases. Discover failure modes before malicious users do.

Multilingual testing ensures quality across all supported languages. Translation quality and cultural appropriateness vary by language. Test each separately and thoroughly.

Cross-platform testing validates experiences across channels. Conversation flows might work differently on web versus mobile. Voice interfaces introduce unique challenges.

Run automated tests as part of every deployment pipeline. Make passing tests a requirement for production releases. This prevents known issues from reaching users.

Document test coverage systematically. Identify gaps where testing lacks depth. Prioritize expanding coverage in high-risk areas.

Review test results regularly as a team. Failed tests indicate potential conversational UX failure modes. Investigate root causes rather than just fixing surface symptoms.

Training Your Team for Failure Detection

Technology alone cannot catch all conversational UX failure modes. Your team needs skills and processes to identify and address problems.

Cross-functional collaboration brings diverse perspectives to problem identification. Engineers see technical issues. Designers notice UX friction. Support teams hear user pain directly.

Regular conversation review sessions build collective understanding. Schedule weekly meetings to examine recent failures. Discuss patterns and potential solutions as a group.

Failure mode taxonomy creates shared vocabulary. Define and document common problem types. This standardization improves communication and tracking.

Escalation protocols ensure urgent issues get immediate attention. Define severity levels and response timeframes. Empower team members to raise critical problems.

Root cause analysis training helps teams look beyond symptoms. Teach methodologies like “5 Whys” to find fundamental issues. Fixes that address the root cause prevent recurrence, while surface-level patches do not.

User empathy development connects teams to real experiences. Have team members sit in on support sessions regularly. Direct exposure to user frustration motivates quality improvements.

Metrics literacy ensures everyone interprets data correctly. Train teams to read dashboards and understand statistical significance. Data-driven decisions require data-literate teams.

Documentation standards maintain institutional knowledge. Record decisions, rationales, and outcomes systematically. This prevents repeating past mistakes.

Continuous learning culture encourages experimentation and growth. Share industry research and best practices regularly. Attend conferences and bring insights back to the team.

Failure celebration might seem counterintuitive but proves valuable. Recognize teams that identify and fix critical conversational UX failure modes. This rewards vigilance and problem-solving.

Knowledge sharing sessions let team members teach each other. Deep expertise in specific areas benefits everyone. Build internal expertise rather than depending solely on external consultants.

Case Studies: Early Detection in Action

Real examples illustrate how early detection prevents major conversational UX failure modes. These cases provide practical lessons for your implementation.

A financial services chatbot showed declining completion rates over two weeks. Monitoring caught the 5% drop before users complained publicly. Analysis revealed a recent update broke context retention in multi-step transactions.

The team rolled back the problematic change within hours. They prevented thousands of failed conversations. Detailed testing before redeploying the fix ensured quality.

An e-commerce assistant experienced sudden intent confusion around product searches. Automated alerts flagged unusual confidence score patterns. Investigation found users adopting new slang terms for trending products.

Quick training data updates restored performance within 24 hours. The team established ongoing monitoring of social media language trends. Proactive vocabulary updates now prevent similar issues.

A healthcare bot encountered regulatory compliance risks. Conversation review revealed instances of medical advice beyond its intended scope. Early detection through keyword monitoring prevented regulatory violations.

Updated conversation flows added appropriate disclaimers and redirections. The team implemented stricter content guidelines for training data. Compliance testing became part of the deployment pipeline.

A customer support bot suffered from increasing escalation rates. Predictive modeling identified conversations likely to fail. The team implemented proactive handoffs before user frustration peaked.

This reduced negative feedback by 30% while maintaining efficiency. Users appreciated smooth transitions to human agents. The model continues improving with more training data.

A travel booking assistant faced context loss in complex itineraries. Session monitoring revealed memory limits caused the problem. Architecture changes increased context window capacity.

The fix improved completion rates by 25% for multi-leg bookings. Users no longer needed to repeat information. Revenue per conversation increased significantly.

Creating a Culture of Continuous Improvement

Sustainable conversational UX quality requires ongoing commitment. One-time fixes address symptoms rather than building systemic excellence.

Data-driven decision making grounds improvements in evidence. Opinions matter less than user behavior patterns. Establish metrics as the foundation for prioritization.

Iteration cycles should be short and frequent. Small improvements compound over time. Big bang redesigns risk introducing new conversational UX failure modes.

User-centric design keeps real needs at the center. Test assumptions with actual users regularly. Proxy metrics matter less than genuine user satisfaction.

Transparent communication shares learnings across the organization. Conversation quality affects marketing, sales, and product teams. Everyone benefits from understanding failure patterns.

Investment in tooling pays long-term dividends. Good monitoring infrastructure enables faster detection and response. Budget for continuous capability improvements.

Experimentation frameworks make testing ideas safe and systematic. A/B tests validate hypotheses before full deployment. This reduces risk while accelerating learning.

Competitive benchmarking provides external perspective. How do your conversational UX failure modes compare to industry standards? Learn from others’ successes and mistakes.

Long-term roadmapping balances immediate fixes with strategic improvements. Some problems require foundational architecture changes. Plan and execute major upgrades systematically.

Success celebration reinforces positive behaviors. Recognize teams that improve key metrics meaningfully. This motivates continued excellence.

Frequently Asked Questions

What are the most common conversational UX failure modes?

Intent misrecognition tops the list of conversational UX failure modes. Systems misunderstand what users actually want to accomplish. Context loss follows closely as conversations extend beyond a few turns. Response irrelevance frustrates users when answers don’t match questions. Conversation loops trap users in repetitive cycles without progress.

How quickly can I detect conversational UX failure modes?

Real-time monitoring detects issues during active conversations. Automated alerts trigger within seconds of threshold breaches. Predictive models can flag high-risk conversations before they fail. Detection speed depends on how sophisticated your monitoring infrastructure is. At a minimum, basic metrics dashboards provide daily visibility.

Do I need machine learning for failure detection?

Machine learning enhances detection but isn’t strictly necessary initially. Simple rule-based monitoring catches many conversational UX failure modes effectively. Track completion rates, intent confidence, and escalation rates manually. Advanced ML models improve prediction accuracy and automation. Start simple and add complexity as your maturity grows.

How many conversations should I review manually?

Review at least 50 conversations weekly across different categories. Sample from successful, failed, and average interactions. Focus review time on edge cases and unusual patterns. Automate metric collection but maintain qualitative review habits. Manual review reveals nuances that metrics cannot capture.

What metrics matter most for early detection?

Completion rate indicates overall conversation success most directly. Intent confidence scores reveal interpretation uncertainty in real-time. Escalation rate measures how often bots need human help. User satisfaction ratings provide direct experience feedback. Average conversation length shows efficiency trends. Monitor all five for comprehensive visibility.

How do I prioritize which failure modes to fix first?

Frequency and severity together determine priority. High-frequency conversational UX failure modes affect more users. High-severity issues cause greater individual impact. Fix problems that combine high frequency and high severity first. Consider business impact like revenue loss or compliance risk. User feedback intensity also guides prioritization.
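One simple way to turn this into a ranked list is to multiply frequency by severity. The counts, the 1 to 5 severity scale, and the failure mode names below are illustrative assumptions; a real scheme might also weight revenue or compliance impact.

```python
def prioritize(failure_modes):
    """Sort failure modes by weekly_count * severity, highest first."""
    return sorted(
        failure_modes,
        key=lambda mode: mode["weekly_count"] * mode["severity"],
        reverse=True,
    )


backlog = [
    {"name": "conversation_loop", "weekly_count": 40, "severity": 5},
    {"name": "context_loss", "weekly_count": 120, "severity": 3},
    {"name": "tone_mismatch", "weekly_count": 300, "severity": 1},
]
for mode in prioritize(backlog):
    print(mode["name"], mode["weekly_count"] * mode["severity"])
```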

Can automated testing catch all conversational UX failure modes?

Automated testing catches known patterns and regression issues reliably. Novel failure modes require human judgment to identify initially. Combine automated protocols with manual conversation review. Update test suites as you discover new problems. Think of testing as a complement to monitoring rather than a replacement for it.

How often should I update my conversational UX baselines?

Review baselines quarterly as user behavior and expectations evolve. Significant product changes require immediate baseline updates. Seasonal patterns might affect some metrics cyclically. Document baseline changes and rationales carefully. Compare current performance against both recent and historical baselines.

What team size do I need for effective failure detection?

Small teams can implement basic monitoring with one dedicated person. Comprehensive programs typically require three to five specialists. Larger organizations might dedicate entire teams to conversation quality. Start with available resources and scale as results demonstrate value. Cross-functional involvement matters more than absolute team size.

How do I measure the ROI of early failure detection?

Calculate costs avoided from prevented customer churn and support escalations. Measure revenue protected by maintaining conversion rates. Track brand reputation improvements through sentiment analysis. Compare development costs before and after implementing detection. Most organizations see positive ROI within six months of implementation.

Conclusion

Conversational UX failure modes threaten user satisfaction and business outcomes daily. Early detection transforms reactive firefighting into proactive optimization. The strategies outlined here provide a practical roadmap for implementation.

Start by establishing baseline metrics across key performance indicators. Implement real-time monitoring dashboards for continuous visibility. Build automated testing protocols that catch regressions before users do. Train your team to recognize patterns and respond effectively.

User feedback mechanisms provide invaluable qualitative insights. Predictive modeling takes detection to the next level of sophistication. These capabilities compound as your monitoring maturity grows.

Remember that perfect conversational experiences remain aspirational. Every system encounters failure modes as use cases expand. Your goal isn’t eliminating all problems but catching them early. Quick detection enables fast response before widespread user impact.

The investment in detection infrastructure pays dividends across multiple dimensions. Customer satisfaction improves when problems get fixed quickly. Support costs decline as automated systems handle more interactions successfully. Development efficiency increases when teams focus on real user needs.

Conversational UX failure modes will evolve as your product and user base grow. A continuous improvement mindset keeps your detection capabilities current. Regularly review and update your monitoring approaches. Learn from each failure to strengthen future prevention.

Your competitive advantage comes from superior execution rather than perfect design. The teams that detect and fix problems fastest win user loyalty. Build your detection capabilities systematically starting today.

The path forward requires commitment but the destination rewards the effort. Users deserve conversational experiences that understand and help them effectively. Early failure detection makes that promise achievable at scale. Your journey toward conversational excellence begins with visibility into what’s breaking and why.