Introduction

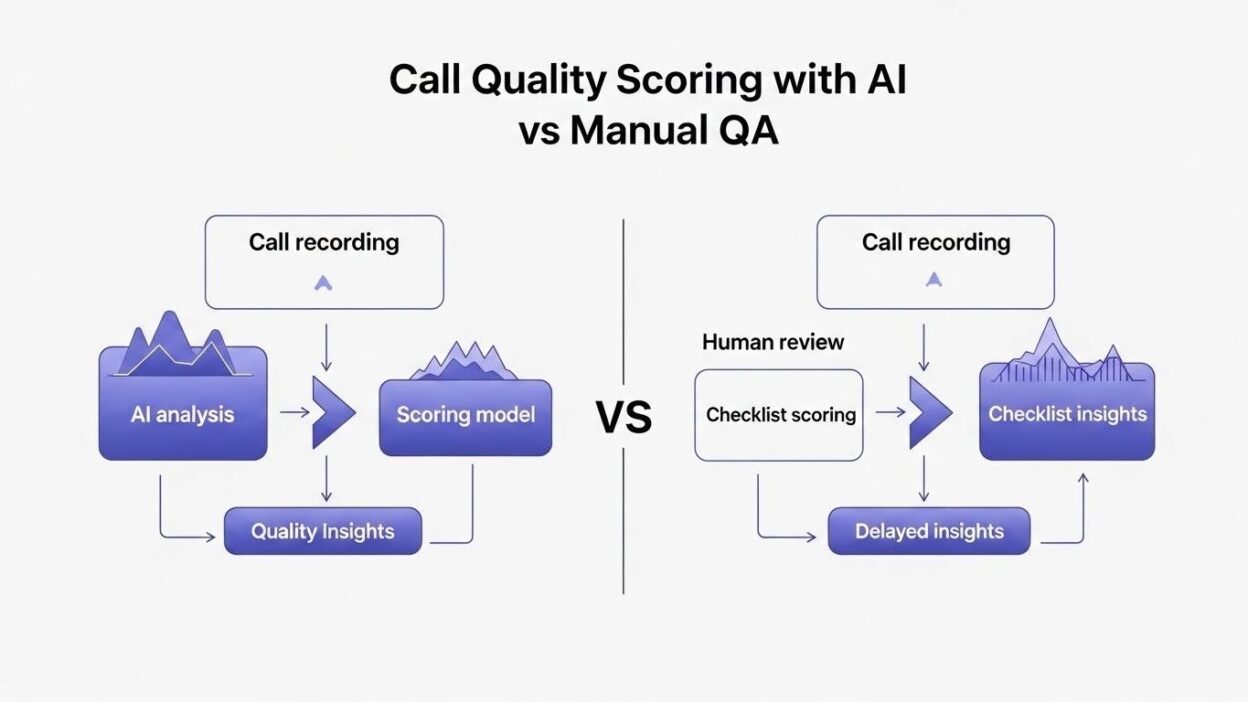

Your quality assurance team listens to the same calls repeatedly. They fill out evaluation forms for hours each day. They score maybe 2-3% of your total call volume.

The other 97% of calls remain unexamined. Problems go unnoticed. Training opportunities get missed. Compliance issues slip through undetected.

Manual QA worked fine when contact centers handled hundreds of calls daily. Modern operations process thousands or tens of thousands. The old approach simply cannot scale.

Call quality scoring with AI promises to evaluate every single interaction. The technology analyzes conversations automatically. It identifies patterns humans would never spot manually.

Quality managers face a critical decision. Should you stick with proven manual methods? Should you embrace AI-powered automation? Can both approaches coexist effectively?

This guide examines both methodologies in depth. You’ll understand how each approach works, what benefits they deliver, and which scenarios favor different strategies.


Understanding Call Quality Scoring with AI

Call quality scoring with AI uses artificial intelligence to evaluate customer service calls automatically, without a person listening to each one. Machine learning models analyze audio recordings or transcripts and assess each conversation against predefined quality criteria. Scores are produced within seconds of processing, across your entire call volume.

The technology operates through several sophisticated processes. Speech recognition converts audio to text. Natural language processing extracts meaning from conversations. Sentiment analysis detects emotional tones. Pattern recognition identifies compliance violations or best practice adherence.

Call quality scoring with AI examines multiple dimensions simultaneously. The system checks whether agents followed scripts correctly. It verifies that required disclosures happened. It measures customer satisfaction indicators. It identifies upsell opportunities that agents missed.
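To make the "required disclosures" check concrete, here is a minimal sketch that scans the agent's side of a transcript for phrases that must appear. The `Utterance` structure, the disclosure names, and the regex patterns are all hypothetical; production systems usually rely on trained classifiers rather than exact patterns, because agents paraphrase.

```python
import re
from dataclasses import dataclass

@dataclass
class Utterance:
    speaker: str  # "agent" or "customer"
    text: str

# Hypothetical disclosure rules: each name maps to a pattern that satisfies it.
REQUIRED_DISCLOSURES = {
    "recording_notice": re.compile(r"\bcall (may be|is being) recorded\b", re.IGNORECASE),
    "identity_verification": re.compile(r"\bverify your (identity|account)\b", re.IGNORECASE),
}

def missing_disclosures(transcript: list[Utterance]) -> list[str]:
    """Return the names of required disclosures the agent never said."""
    agent_text = " ".join(u.text for u in transcript if u.speaker == "agent")
    return [name for name, pattern in REQUIRED_DISCLOSURES.items()
            if not pattern.search(agent_text)]

call = [
    Utterance("agent", "Thanks for calling. This call may be recorded for quality purposes."),
    Utterance("customer", "Hi, I need help with my bill."),
    Utterance("agent", "Sure, I can look into that for you."),
]
print(missing_disclosures(call))  # ['identity_verification']
```

Only agent speech is checked, so a customer repeating the phrase does not count as the disclosure being made.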

Scoring happens at massive scale. An AI system can evaluate 10,000 calls overnight. The same work would take a manual QA team months to complete. Every interaction receives the same rigorous evaluation.
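The "months" estimate follows from simple arithmetic. This sketch assumes 17.5 minutes per manual review (the midpoint of the 15-20 minutes cited later in this guide), a team of five evaluators, and six productive scoring hours per evaluator per day; the team size and daily hours are illustrative assumptions.

```python
def manual_review_days(calls: int, minutes_per_call: float,
                       evaluators: int, scoring_hours_per_day: float) -> float:
    """Working days a QA team needs to score `calls` manually."""
    total_hours = calls * minutes_per_call / 60
    return total_hours / (evaluators * scoring_hours_per_day)

days = manual_review_days(calls=10_000, minutes_per_call=17.5,
                          evaluators=5, scoring_hours_per_day=6)
print(f"{days:.0f} working days")  # 97 working days, roughly 4-5 months
```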

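Scoring is also consistent. Given the same call and the same model version, AI applies identical criteria and returns the same score. Evaluator fatigue and mood don't affect judgment, and the scoring rubric is applied uniformly. The model can still inherit bias from its training data, a risk covered under common challenges below.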

Real-time scoring capabilities separate AI from manual approaches. Some systems analyze calls while they’re still happening. Supervisors receive alerts about problematic interactions immediately. They can intervene before calls end badly.
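Live alerting is often built as a rolling check over per-utterance signals. The sketch below assumes an upstream sentiment model emits a score from -1 (negative) to +1 (positive) for each customer utterance; the window size and alert threshold are hypothetical tuning parameters, not values from any particular platform.

```python
from collections import deque
from typing import Iterable, Iterator

def sentiment_alerts(scores: Iterable[float], window: int = 3,
                     threshold: float = -0.5) -> Iterator[int]:
    """Yield the index of each utterance where the rolling mean sentiment
    over the last `window` utterances falls below `threshold`."""
    recent: deque = deque(maxlen=window)
    for i, score in enumerate(scores):
        recent.append(score)
        if len(recent) == window and sum(recent) / window < threshold:
            yield i

live_call = [0.2, -0.1, -0.6, -0.7, -0.8, -0.9]
print(list(sentiment_alerts(live_call)))  # [4, 5]
```

Averaging over a window keeps one sharp remark from paging a supervisor; in practice you would probably alert once per call rather than on every later utterance.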

Custom scoring models adapt to your specific business needs. You define what quality means for your organization. The AI learns your priorities and evaluation criteria. It applies your standards automatically to every conversation.

How Manual QA Works in Contact Centers

Quality assurance specialists listen to recorded calls individually. They select a small sample from total call volume. The selection might be random or based on specific criteria.

Evaluators use standardized scorecards during reviews. These forms contain specific questions about call performance. Did the agent greet the customer properly? Did they verify account information? Did they resolve the issue? Each criterion receives a score.
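A scorecard like this is often represented as weighted criteria. The sketch below uses hypothetical weights, scores each criterion from 0 to 5, and rolls the results up into a 0-100 score; your own scorecard will define different questions, scales, and weights.

```python
from dataclasses import dataclass

@dataclass
class Criterion:
    question: str
    weight: float      # relative importance (hypothetical values)
    max_points: int = 5

SCORECARD = [
    Criterion("Greeted the customer properly?", weight=1.0),
    Criterion("Verified account information?", weight=2.0),
    Criterion("Resolved the issue?", weight=3.0),
]

def score_call(points: list[int]) -> float:
    """Combine per-criterion points into a 0-100 weighted score."""
    if len(points) != len(SCORECARD):
        raise ValueError("one score per criterion is required")
    earned = sum(c.weight * p / c.max_points for c, p in zip(SCORECARD, points))
    return 100 * earned / sum(c.weight for c in SCORECARD)

print(score_call([5, 5, 3]))  # 80.0
```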

The listening and scoring process takes considerable time. A single call might take 15-20 minutes to evaluate properly. The evaluator needs to listen carefully, take notes, and complete detailed forms. They might replay sections multiple times for accuracy.

Feedback sessions follow the evaluation process. Quality analysts meet with agents to discuss their performance. They play call excerpts to illustrate specific points. They provide coaching on improvement areas.

Calibration sessions ensure consistency among evaluators. Multiple QA specialists listen to the same calls independently. They compare their scores and discuss discrepancies. This process aligns everyone’s interpretation of quality standards.
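One common way to quantify agreement in a calibration session is Cohen's kappa, which corrects raw agreement for the agreement expected by chance (1.0 is perfect agreement, 0 is chance level). The sketch assumes two evaluators rated the same ten calls pass/fail; the ratings are made up, and kappa is only one of several agreement statistics a program might choose.

```python
from collections import Counter

def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Chance-corrected agreement between two raters on the same calls."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and paired")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label]
                   for label in counts_a.keys() | counts_b.keys()) / n**2
    if expected == 1:
        return 1.0  # both raters used one identical label for every call
    return (observed - expected) / (1 - expected)

# Hypothetical calibration ratings on ten calls
a = ["pass", "pass", "fail", "pass", "fail", "pass", "pass", "fail", "pass", "pass"]
b = ["pass", "fail", "fail", "pass", "fail", "pass", "pass", "pass", "pass", "pass"]
print(round(cohens_kappa(a, b), 2))  # 0.52
```

The two raters agree on 80% of calls, but because "pass" dominates, much of that agreement would happen by chance, which is why kappa comes out well below 0.8.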

Sampling strategies determine which calls get reviewed. Some centers use purely random selection. Others target specific call types, agent groups, or time periods. The goal is achieving representative coverage within resource constraints.
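The difference between purely random and targeted sampling shows up quickly in code. This sketch draws a fixed number of random calls per agent (a simple form of stratified sampling) so every agent is represented; the call records and the per-agent quota are hypothetical.

```python
import random
from collections import Counter, defaultdict

def sample_per_agent(calls: list[dict], per_agent: int, seed: int = 0) -> list[dict]:
    """Randomly pick up to `per_agent` calls for each agent (stratified by agent)."""
    rng = random.Random(seed)  # seeded so the same sample can be reproduced later
    by_agent: dict = defaultdict(list)
    for call in calls:
        by_agent[call["agent"]].append(call)
    sample = []
    for agent_calls in by_agent.values():
        sample.extend(rng.sample(agent_calls, min(per_agent, len(agent_calls))))
    return sample

# Hypothetical month: agent A handled 300 calls, agent B handled 20
calls = ([{"id": i, "agent": "A"} for i in range(300)]
         + [{"id": 300 + i, "agent": "B"} for i in range(20)])
picked = sample_per_agent(calls, per_agent=4)
print(Counter(c["agent"] for c in picked))  # Counter({'A': 4, 'B': 4})
```

A purely random draw of eight calls from this pool would include, on average, only half a call from agent B.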

Manual scoring typically covers 1-5% of total call volume. A team of five QA analysts might review 500 calls monthly from a total volume of 20,000, which is 2.5% coverage. The vast majority of interactions never receive formal evaluation.

Documentation and reporting consume additional time. QA teams compile results into dashboards and reports. They identify trends across agents and teams. They prepare presentations for leadership review.

Key Differences Between AI and Manual Approaches

Speed separates these methodologies dramatically. Call quality scoring with AI evaluates calls in seconds. Manual reviews typically take 15-20 minutes per call. AI processes thousands of calls in the time humans handle dozens.

Coverage rates differ by orders of magnitude. AI can score 100% of your call volume automatically. Manual QA realistically covers 1-5% with significant resource investment. The difference impacts what problems you can detect.

Consistency varies substantially between approaches. AI applies identical criteria to every single call without variation. Human evaluators bring subjective interpretation despite calibration efforts. Personal preferences and moods influence manual scores.

Cost structures look completely different. Manual QA requires ongoing labor costs for evaluator salaries. AI involves upfront licensing and implementation costs but lower ongoing expenses. The break-even point typically comes quickly for high-volume centers.

Each approach delivers a different kind of insight. AI excels at pattern detection across massive datasets. It spots trends invisible to humans reviewing small samples. Manual evaluation captures nuanced context that algorithms might miss.

Real-time capabilities exist only with AI. Manual QA always operates retrospectively on recorded calls. Call quality scoring with AI can analyze conversations while they’re happening. This enables live coaching and intervention.

Adaptability timelines differ significantly. Changing manual QA criteria happens quickly through scorecard updates and evaluator training. Modifying AI scoring models requires retraining algorithms. The process takes more time but ensures consistent application.

Human evaluators currently have the edge in emotional understanding. Experienced QA specialists detect subtle cues of frustration or satisfaction. AI sentiment analysis is improving steadily but has not yet matched human nuance.

Benefits of Call Quality Scoring with AI

Complete visibility into all customer interactions transforms quality management. You evaluate every call rather than tiny samples. Hidden problems surface immediately. You understand true performance across your entire operation.

Compliance risk drops dramatically with comprehensive monitoring. Regulatory violations get detected automatically. The system can check every call, not just a sample, for missing disclosures or improper procedures. Legal exposure decreases substantially.

Agent coaching becomes data-driven and specific. AI identifies exactly which skills each agent needs to develop. You see patterns across dozens of their calls. Coaching conversations focus on concrete behavioral changes.

Customer experience insights expand considerably. You understand what frustrates customers at scale. You identify which agent behaviors correlate with satisfaction. You spot systemic issues in processes or products.

Supervisor productivity increases dramatically. They spend less time randomly sampling calls. They focus coaching energy where it matters most. Alert systems guide them to critical situations.

Onboarding time for new agents decreases. Call quality scoring with AI provides immediate feedback on every call. New hires learn faster with consistent, objective evaluation. They understand expectations clearly through automated scoring.

Cost per evaluation plummets over time. The marginal cost of evaluating one more call approaches zero with AI. Manual evaluation maintains constant per-call costs. Scaling AI is dramatically cheaper than scaling QA teams.

Trending and analytics reach new levels. You track quality metrics by hour, day, campaign, or any dimension. You correlate quality scores with customer outcomes. You predict future performance based on leading indicators.

Advantages of Manual QA Methods

Human judgment captures contextual nuances that AI misses. An experienced evaluator understands when breaking script actually improved the interaction. They recognize creative problem-solving that algorithms might score negatively.

Relationship building between QA and agents strengthens through manual review. Face-to-face coaching sessions create trust and rapport. Agents accept feedback more readily from humans they know and respect.

Complex situations require human interpretation. Some calls involve unique circumstances that don’t fit standard criteria. Manual evaluators apply common sense and flexibility. They make judgment calls that rigid algorithms cannot.

Agent development benefits from personalized coaching. Human coaches tailor their approach to individual learning styles. They provide encouragement and motivation alongside corrective feedback. They build agents up rather than just scoring them down.

Cultural and linguistic subtleties come through in manual review. Evaluators who share language backgrounds with agents catch nuances. They understand regional expressions and communication styles. They apply cultural context to evaluations.

Creativity and innovation get recognized through human evaluation. An agent who finds a brilliant new solution to a common problem deserves recognition. Manual evaluators spot and celebrate these moments. AI might miss them entirely.

Trust in the evaluation process runs higher with manual methods. Agents understand human judgment even when they disagree. Black-box AI scoring can feel arbitrary or unfair. Transparency about criteria helps but doesn’t eliminate skepticism.

Soft skills assessment remains more reliable with human evaluators. Empathy, rapport building, and emotional intelligence are hard to quantify. Experienced QA specialists recognize these qualities intuitively. Algorithms struggle with such subjective dimensions.

Implementation Requirements for AI Scoring

Technology infrastructure forms the foundation. You need cloud computing resources to process large audio files. Storage capacity must handle recordings of every call. Integration capabilities connect AI systems to your contact center platform.

Speech analytics engines convert audio to analyzable text. These systems must handle your specific accent patterns and industry terminology. Accuracy rates matter enormously. Poor transcription leads to inaccurate scoring.

Training data teaches AI systems your quality standards. You provide examples of excellent, good, poor, and terrible calls. The system learns patterns associated with each quality level. More training data produces more accurate models.

Scoring criteria definition requires careful thought. You must articulate quality standards precisely enough for algorithms. Vague concepts like “build rapport” need specific behavioral indicators. The AI needs measurable signals to evaluate.
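As an illustration, the sketch below turns "build rapport" into three measurable signals: whether the agent used the customer's name, whether they used an acknowledgement phrase, and whether the agent spoke no more than 70% of the words. The signals, phrase list, and 70% limit are hypothetical examples, not a standard definition of rapport.

```python
import re

# Hypothetical phrase list standing in for "acknowledged the customer's concern"
ACKNOWLEDGEMENTS = re.compile(r"\b(i understand|i'm sorry|that makes sense)\b", re.IGNORECASE)

def rapport_indicators(turns: list[tuple[str, str]], customer_name: str) -> dict[str, bool]:
    """Check three hypothetical behavioral indicators of rapport."""
    agent_text = " ".join(text for speaker, text in turns if speaker == "agent")
    agent_words = len(agent_text.split())
    total_words = sum(len(text.split()) for _, text in turns)
    name_pattern = re.compile(rf"\b{re.escape(customer_name)}\b", re.IGNORECASE)
    return {
        "used_customer_name": bool(name_pattern.search(agent_text)),
        "acknowledged_concern": bool(ACKNOWLEDGEMENTS.search(agent_text)),
        # Agent spoke no more than 70% of the words in the conversation
        "balanced_talk_time": total_words > 0 and agent_words / total_words <= 0.7,
    }

turns = [
    ("customer", "My order arrived damaged and I am really annoyed about it."),
    ("agent", "I'm sorry to hear that, Maria. Let me arrange a replacement."),
    ("customer", "Thank you, that would help a lot."),
]
print(rapport_indicators(turns, "Maria"))
```

Each indicator is a yes/no signal the AI can measure on every call, which is what a vague rubric item has to become before it can be scored automatically.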

Integration with existing systems ensures smooth workflows. Call quality scoring with AI should feed results into your workforce management (WFM) platform, learning management system (LMS), and performance management systems. Agents and supervisors need easy access to scores and insights.

Change management prepares your organization for new approaches. Agents need to understand how AI evaluation works. Supervisors must learn to interpret and act on AI-generated insights. Resistance emerges without proper communication.

Ongoing optimization keeps AI scoring accurate. You continuously compare AI scores against expert human evaluations. You identify discrepancies and retrain models. Quality standards evolve and AI must adapt.
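A minimal version of this comparison scores an audited sample both ways and flags the model when the two diverge. The sketch assumes both scores use a 0-100 scale; the 5-point mean-absolute-error tolerance is a hypothetical value each program would set for itself.

```python
from statistics import mean

def audit_model(ai_scores: list[float], expert_scores: list[float],
                tolerance: float = 5.0) -> dict:
    """Compare AI scores with expert scores on the same calls."""
    if len(ai_scores) != len(expert_scores) or not ai_scores:
        raise ValueError("need paired, non-empty score lists")
    diffs = [ai - expert for ai, expert in zip(ai_scores, expert_scores)]
    mae = mean(abs(d) for d in diffs)
    return {
        "mean_abs_error": mae,
        "mean_bias": mean(diffs),  # positive means the AI scores more generously
        "needs_retraining": mae > tolerance,
    }

# Hypothetical audit of five calls
print(audit_model([82, 74, 90, 65, 88], [80, 70, 91, 60, 85]))
```

Tracking the signed bias alongside the absolute error shows whether discrepancies are random noise or a systematic lean in one direction.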

Privacy and security protections safeguard sensitive data. Call recordings contain personal customer information. Your AI system must comply with data protection regulations. Encryption, access controls, and retention policies all matter.

Setting Up Manual QA Programs

Dedicated QA staff provides the human resource foundation. You need skilled evaluators who understand your business and customer service principles. Hiring people with contact center experience accelerates program maturity.

Scorecard development defines what you measure. Effective scorecards balance objective and subjective criteria. They align with business goals and customer expectations. They provide clear definitions for each scoring dimension.

Sample size calculations determine coverage targets. Reliable per-agent results require a minimum number of evaluations per agent per period. You balance comprehensive coverage against resource constraints. Most programs aim for 3-5 evaluations per agent monthly.
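The gap between 3-5 monthly evaluations and a reliable per-agent average is easy to quantify. Under a normal approximation, the 95% margin of error on an average score is about 1.96 × σ / √n, so the sample needed for a target margin E is n = (1.96 × σ / E)². The sketch assumes a standard deviation of 10 points for individual call scores on a 100-point scale, which is a hypothetical figure; estimate σ from your own data.

```python
import math

def margin_of_error(sd: float, n: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for an agent's mean score from n reviews.
    Uses the normal approximation, which understates the margin for very small n."""
    return z * sd / math.sqrt(n)

def required_sample_size(sd: float, target_margin: float, z: float = 1.96) -> int:
    """Reviews needed to estimate a mean score within +/- target_margin."""
    return math.ceil((z * sd / target_margin) ** 2)

SD = 10.0  # hypothetical spread of individual call scores (points)
print(round(margin_of_error(SD, n=4), 1))         # 9.8 points with 4 reviews
print(required_sample_size(SD, target_margin=5))  # 16 reviews for +/- 5 points
```

Under these assumptions, four reviews leave roughly a 20-point-wide band around an agent's true average, which is why small samples make agent-to-agent comparisons shaky.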

Calibration processes ensure inter-rater reliability. All evaluators must interpret criteria consistently. Regular calibration sessions compare scores on identical calls. Discrepancies get resolved through discussion and guideline refinement.

Feedback delivery systems close the improvement loop. Quality scores mean nothing without actionable coaching. You establish regular one-on-one sessions between evaluators and agents. Coaching focuses on specific improvement opportunities.

Dispute resolution procedures handle disagreements fairly. Agents sometimes believe scores misrepresent their performance. Clear processes for challenging evaluations maintain trust. Second opinions from senior QA or supervisors resolve conflicts.

Technology tools streamline manual workflows. QA software helps evaluators access calls, complete scorecards, and track trends. Screen recording provides additional context. Speech analytics might highlight sections for focused review.

Performance tracking links QA to agent development. Quality scores integrate into performance reviews and incentive calculations. The connection between scores and consequences motivates improvement. Clear pathways from current to desired performance guide development.

Accuracy and Reliability Comparison

Statistical reliability favors AI approaches significantly. Scoring every call removes sampling error from quality assessment. You measure actual performance rather than estimating it from a small sample, although each individual score is only as accurate as the model. Confidence intervals around agent averages narrow dramatically.

Inter-rater variability disappears with AI. Multiple human evaluators often score the same call differently. Calibration reduces but never eliminates this variance. Call quality scoring with AI produces identical scores for the same call regardless of when evaluation occurs, as long as the model version is unchanged.

Bias can be easier to control with algorithms. Human evaluators carry unconscious biases about age, gender, accent, and other factors. These biases influence scores subtly. AI can be designed to exclude protected characteristics as inputs, although bias can still enter indirectly, for example through how the model handles accents.

Edge case handling challenges both approaches differently. Unusual situations confuse AI systems trained on typical interactions. Human evaluators handle novelty better but might lack guidance. Neither approach excels at extremely rare scenarios.

Accuracy measurement requires ground truth data. You need expert consensus on what scores should be. Comparing AI and human scores against this benchmark reveals accuracy. Both approaches show errors but in different patterns.

False positive and false negative rates matter for compliance. AI might flag innocent statements as violations. It might miss subtle problematic language. Manual review catches some issues AI misses and vice versa.
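These error rates are usually measured against human-reviewed ground truth. The sketch below computes precision (the share of AI flags that were real violations) and recall (the share of real violations the AI caught) from paired labels; the labels are hypothetical.

```python
def flag_error_rates(ai_flags: list[bool], true_violations: list[bool]) -> dict:
    """Precision, recall, and raw error counts for compliance flags."""
    pairs = list(zip(ai_flags, true_violations))
    tp = sum(flag and truth for flag, truth in pairs)
    fp = sum(flag and not truth for flag, truth in pairs)  # false positives
    fn = sum(truth and not flag for flag, truth in pairs)  # false negatives
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_positives": fp,
        "false_negatives": fn,
    }

# Hypothetical review of eight calls: AI flags vs. expert judgment
ai    = [True, True, False, True, False, False, True, False]
truth = [True, False, False, True, True, False, True, False]
print(flag_error_rates(ai, truth))
```

For compliance, a missed violation usually costs more than a false alarm, so teams often tune flagging thresholds toward higher recall and let human review absorb the extra false positives.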

Score stability over time differs between methods. Manual evaluator scoring drifts gradually without regular calibration. An AI model applies the same scoring logic until it is explicitly retrained, although its accuracy can still degrade as call patterns change. This consistency aids long-term trending.

Contextual accuracy challenges AI systems. Sarcasm, humor, and cultural references confuse natural language processing. Human evaluators navigate these complexities naturally. Call quality scoring with AI continues improving but hasn’t achieved human-level context understanding.

Cost Analysis: AI vs Manual QA

Upfront costs weigh heavily on the AI side. Licensing fees for enterprise speech analytics platforms can run into six figures annually. Implementation services add substantial professional service costs. Integration work requires specialized technical expertise.

Ongoing operational costs favor AI dramatically. Manual QA requires salaries for full-time evaluators. A team of five specialists might cost $300,000 annually. AI subscription costs typically grow far more slowly than call volume, though pricing models vary by vendor.

Scalability economics strongly benefit AI approaches. Doubling call volume requires doubling manual QA staff to maintain the same coverage rate. AI systems handle increased volume with minimal cost increase. Marginal cost per evaluation approaches zero.

Hidden costs in manual QA include turnover and training. QA evaluator positions experience significant turnover. Recruiting and training replacements costs time and money. Institutional knowledge walks out the door with departures.

Technology costs exist in both approaches. Manual QA uses specialized software for scorecards and reporting. These tools cost tens of thousands annually. AI platforms include similar functionality in their pricing.

Opportunity costs of limited coverage hurt manual approaches. Undetected compliance violations carry enormous potential liability. Missed coaching opportunities slow agent development. The cost of ignorance about 95% of your calls adds up.

ROI timelines differ substantially. Manual QA shows value immediately, but its costs rise linearly with call volume. Call quality scoring with AI requires upfront investment, but its costs rise far more slowly than volume. Break-even typically occurs within 12-18 months for mid-sized centers.

Total cost of ownership over three years usually favors AI. Calculate all costs including labor, technology, implementation, and opportunity costs. AI TCO drops dramatically in years two and three. Manual QA maintains steady annual costs.
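A break-even estimate needs only a few inputs. Apart from the $300,000 annual team cost used as an example above, every figure below is a hypothetical placeholder: the AI implementation cost, the annual license, and the smaller manual team kept on for hybrid audits. Substitute your own quotes before drawing conclusions.

```python
from typing import Optional

def cumulative_costs(months: int, upfront: float, monthly: float) -> list[float]:
    """Cumulative spend at the end of each month."""
    return [upfront + monthly * m for m in range(1, months + 1)]

def break_even_month(manual: list[float], ai: list[float]) -> Optional[int]:
    """First month in which cumulative AI spend is at or below manual spend."""
    for month, (manual_cost, ai_cost) in enumerate(zip(manual, ai), start=1):
        if ai_cost <= manual_cost:
            return month
    return None

MONTHS = 36
# Five-person QA team at $300,000 a year
manual = cumulative_costs(MONTHS, upfront=0, monthly=300_000 / 12)
# Hypothetical: $100,000 implementation, $120,000/yr license,
# plus a smaller $90,000/yr audit team retained for hybrid review
ai = cumulative_costs(MONTHS, upfront=100_000, monthly=(120_000 + 90_000) / 12)

print(break_even_month(manual, ai))  # 14
print(f"3-year totals: manual ${manual[-1]:,.0f}, AI ${ai[-1]:,.0f}")
```

The upfront cost lands entirely in year one, which is why the AI line falls below the manual line partway through and the gap widens in years two and three.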

Integration and Hybrid Approaches

Combined strategies leverage strengths of both methods. AI scores every call automatically. Manual QA evaluates a targeted sample for quality control. This hybrid approach provides comprehensive coverage with human validation.

AI-identified exceptions trigger manual review. The algorithm flags unusual situations or potential errors. Human evaluators examine these cases specifically. This focuses precious human attention where it adds most value.
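Routing rules like this can be simple. The sketch below sends a call to a human when the AI score is low, when the model reports low confidence, or when a compliance flag fires. The `ScoredCall` fields (including a per-call confidence value) and the thresholds are hypothetical; platforms differ in which signals they expose.

```python
from dataclasses import dataclass

@dataclass
class ScoredCall:
    call_id: str
    ai_score: float       # 0-100
    confidence: float     # 0-1, as reported by the (hypothetical) model
    compliance_flag: bool

def review_reasons(call: ScoredCall, min_score: float = 60,
                   min_confidence: float = 0.7) -> list[str]:
    """Explain why a call needs manual review (an empty list means no review)."""
    reasons = []
    if call.compliance_flag:
        reasons.append("compliance flag")
    if call.ai_score < min_score:
        reasons.append("low score")
    if call.confidence < min_confidence:
        reasons.append("low model confidence")
    return reasons

queue = [
    ScoredCall("c1", 88, 0.95, False),
    ScoredCall("c2", 52, 0.90, False),
    ScoredCall("c3", 81, 0.40, True),
]
for call in queue:
    if reasons := review_reasons(call):
        print(call.call_id, reasons)
```

Returning reasons instead of a bare yes/no tells the human evaluator what to look for when they open the call.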

Calibration uses AI for consistency training. Evaluators score calls that AI also scored. Discrepancies reveal where human judgment drifts from defined standards. This accelerates calibration and improves reliability.
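As a sketch, the code below computes each evaluator's average difference from the AI score on the same calls. A consistent positive or negative offset suggests that evaluator scores more leniently or more strictly than the defined standard. The names, scores, and 5-point threshold are hypothetical, and treating the AI score as the reference only makes sense once the model itself has been validated.

```python
from collections import defaultdict
from statistics import mean

def evaluator_offsets(records: list[tuple[str, float, float]]) -> dict[str, float]:
    """Mean (human score - AI score) per evaluator."""
    diffs: dict = defaultdict(list)
    for evaluator, human_score, ai_score in records:
        diffs[evaluator].append(human_score - ai_score)
    return {name: mean(values) for name, values in diffs.items()}

records = [
    ("Priya", 85, 80), ("Priya", 90, 84), ("Priya", 78, 73),
    ("Tom", 70, 72), ("Tom", 81, 80), ("Tom", 66, 68),
]
offsets = evaluator_offsets(records)
for name, offset in offsets.items():
    note = "review in next calibration" if abs(offset) > 5 else "within tolerance"
    print(f"{name}: {offset:+.1f} ({note})")
```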

Appeal processes benefit from dual evaluation. An agent disputes their AI score on a particular call. A human evaluator reviews the disputed call manually. This provides fairness while maintaining AI efficiency.

Training development combines AI insights with human creativity. Call quality scoring with AI identifies skill gaps across the agent population. Human trainers design engaging programs to address these gaps. Data informs but humans create learning experiences.

Quality assurance auditing validates AI accuracy. QA specialists regularly review AI-scored calls. They verify algorithms apply criteria correctly. They identify model drift or accuracy degradation. They guide retraining priorities.

Customer experience analysis uses both lenses. AI quantifies satisfaction patterns across thousands of calls. Human researchers explore specific interactions deeply. Together they provide comprehensive understanding.

Performance management incorporates multiple data sources. AI scores contribute to agent evaluations. Manual coaching notes add qualitative context. Supervisors make holistic decisions using all available information.

Common Challenges and Solutions

Agent resistance to AI monitoring creates cultural challenges. People fear being watched constantly by unfeeling algorithms. Address this through transparency about how AI works and what it measures. Emphasize coaching benefits over punitive aspects.

Data quality issues undermine AI accuracy. Poor audio quality produces bad transcriptions. System metadata errors misattribute calls. Invest in data quality monitoring and cleansing. Garbage in guarantees garbage out.

Model bias can emerge in unexpected ways. AI might score certain accents or speech patterns unfairly. Regular fairness audits across demographic groups catch these issues. Adjust training data to eliminate bias.
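A basic fairness audit compares score distributions across groups that should not affect quality scores. The sketch below groups AI scores by a hypothetical accent-group label, kept only for auditing, and flags any group whose average trails the overall average by more than a chosen margin. The data and the 5-point margin are illustrative; a real audit would also check whether a gap is statistically meaningful.

```python
from collections import defaultdict
from statistics import mean

def score_gaps(scores: list[tuple[str, float]], margin: float = 5.0) -> dict[str, float]:
    """Return groups whose mean score trails the overall mean by more than `margin`."""
    by_group: dict = defaultdict(list)
    for group, score in scores:
        by_group[group].append(score)
    overall = mean(score for _, score in scores)
    return {group: round(mean(vals) - overall, 1)
            for group, vals in by_group.items()
            if overall - mean(vals) > margin}

# Hypothetical AI scores tagged with an audit-only group label
audit = [("group_a", 84), ("group_a", 80), ("group_a", 82),
         ("group_b", 83), ("group_b", 81), ("group_b", 85),
         ("group_c", 70), ("group_c", 72), ("group_c", 71)]
print(score_gaps(audit))  # {'group_c': -7.7}
```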

Privacy concerns arise with comprehensive call recording. Customers and agents both worry about surveillance. Maintain clear policies about data retention and access. Comply rigorously with regulations and standards like GDPR and PCI DSS.

Scorecard complexity confuses both AI and humans. Overcomplicated evaluation criteria produce inconsistent results. Simplify to essential dimensions that clearly link to business outcomes. Less is often more in quality measurement.

Change management difficulties slow adoption. Supervisors accustomed to manual sampling struggle with AI dashboards. Provide comprehensive training on new tools and workflows. Celebrate early wins to build momentum.

Integration technical challenges delay implementations. Legacy contact center systems may lack modern APIs. Budget time and money for middleware and custom development. Choose AI vendors with proven integration experience.

Continuous improvement requires ongoing investment. Call quality scoring with AI isn’t set-and-forget technology. Customer expectations evolve. Business priorities shift. Plan for regular model updates and refinement.

Real-World Use Cases and Results

A major insurance company implemented AI scoring across 2,000 agents. They evaluated 100% of customer calls instead of the previous 2%. Compliance violation detection increased by 400%. They prevented three potential regulatory issues before external audit.

A telecommunications provider combined AI with targeted manual review. AI flagged the lowest-scoring 10% of calls for human coaching. Agent performance scores improved 23% within six months. Customer satisfaction increased by 15 points.

A financial services contact center used AI to personalize coaching. Each agent received specific skill development recommendations based on their call patterns. Training completion rates doubled. Time to proficiency for new hires decreased by 30%.

A healthcare call center deployed real-time AI monitoring. Supervisors received alerts about distressed calls in progress. They intervened immediately in 47 cases during the first month. Patient satisfaction scores increased significantly.

A retail customer service organization replaced manual QA entirely with AI. They redirected QA staff to full-time coaching roles. Agent coaching hours tripled. Quality scores improved while QA costs decreased by 40%.

An outsourced contact center used AI for client reporting. They provided detailed quality metrics on 100% of client calls. Client retention improved by demonstrating superior quality management. They won new business based on comprehensive reporting capabilities.

A B2B sales organization used call quality scoring with AI to identify top performers. They analyzed which behaviors correlated with closed deals. They trained all reps on these winning behaviors. Revenue per rep increased 18%.

A government agency implemented AI for compliance monitoring. They detected policy violations that manual sampling missed. They remediated systemic training gaps. Audit findings decreased by 60% year-over-year.

Future Trends in Quality Scoring

Real-time coaching will transform agent development. AI will provide live guidance during active calls. Agents will receive suggestions for better responses in real time. The system becomes a co-pilot rather than just a judge.

Predictive quality scoring will emerge from advanced analytics. AI will forecast which agents are likely to have quality issues before they occur. Proactive intervention will prevent problems rather than just detect them.

Emotion AI will deepen customer sentiment understanding. Technology will detect frustration, confusion, satisfaction, and other emotions with high accuracy. Quality scoring will incorporate emotional journey mapping.

Video call analysis will extend beyond audio. AI will evaluate facial expressions, body language, and visual engagement. Video quality dimensions will join traditional audio scoring criteria.

Multimodal analysis will combine multiple data streams. The system will consider call audio, screen activity, CRM interactions, and customer history simultaneously. Holistic evaluation will replace narrow call-only assessment.

Automated coaching content generation will personalize development. AI will create custom training modules addressing individual agent weaknesses. Learning paths will adapt dynamically to progress.

Quality score prediction will guide resource allocation. AI will forecast next month’s quality metrics based on current trends. Operations leaders will make proactive staffing and training decisions.

Cross-channel quality consistency will become standard. Call quality scoring with AI will extend to chat, email, and social media. Organizations will maintain unified quality standards across all channels.

Making Your Decision

Assess your current call volume and growth trajectory. Small centers handling hundreds of calls daily might not justify AI investment. Operations processing thousands of daily interactions see clear ROI from automation.

Evaluate your compliance risk profile carefully. Heavily regulated industries with significant potential liability need comprehensive monitoring. Financial services, healthcare, and debt collection should lean toward AI.

Consider your quality assurance team capabilities. Strong existing manual QA programs can enhance rather than replace their work with AI. Struggling QA programs might benefit from complete transformation.

Review your budget constraints and approval processes. AI requires upfront capital investment. Manual QA spreads costs over time through operational budgets. Different organizations have different spending flexibility.

Examine your technical infrastructure readiness. Modern cloud contact centers integrate easily with AI platforms. Legacy on-premise systems require more implementation work. Technical readiness impacts timeline and cost.

Survey your agents about scoring preferences. Some workforces embrace technology eagerly. Others resist change and prefer human interaction. Cultural factors influence implementation success.

Pilot before committing to full deployment. Test call quality scoring with AI on a subset of calls or agents. Compare results against manual evaluation. Measure actual benefits before enterprise rollout.

Plan for evolution rather than revolution. You might start with manual QA, add AI for comprehensive scoring, then optimize the hybrid model. Your approach can mature over time as technology and capabilities develop.


Conclusion

Call quality scoring with AI and manual QA represent two distinct approaches to a critical challenge. Both methods aim to improve agent performance and customer experience. Each brings unique strengths to quality management.

AI delivers unmatched scale, speed, and consistency. It evaluates every interaction without fatigue and applies the same criteria every time. It spots patterns across massive datasets. It provides real-time insights that enable immediate action.

Manual QA offers nuanced judgment and relationship building. Human evaluators understand context and complexity. They coach agents with empathy and flexibility. They recognize exceptional performance that breaks conventional patterns.

The choice between these approaches isn’t binary for most organizations. Hybrid models combine AI efficiency with human wisdom. They evaluate everything while preserving judgment where it matters most.

Your specific circumstances determine the optimal approach. Call volume, compliance requirements, budget constraints, and technical capabilities all factor into the decision. No single answer fits every contact center.

Implementation quality matters more than methodology choice. Poorly executed AI scoring disappoints just like inadequate manual QA programs. Success requires commitment, investment, and continuous improvement.

The trend toward AI-powered quality management will accelerate. Technology capabilities improve constantly. Costs decrease over time. More organizations will adopt call quality scoring with AI in coming years.

Customer expectations for service quality keep rising. Your quality management approach must deliver genuine performance improvement. Whether through AI, manual methods, or hybrid strategies, the goal remains the same.

Start evaluating your options today. Research available platforms and vendors. Calculate potential ROI for your situation. Talk with peers about their experiences. The perfect time to improve quality management is right now.

Your agents want to succeed. Your customers deserve excellent service. Quality scoring provides the foundation for both outcomes. Choose the approach that positions your organization for long-term success.