Introduction

Human resources teams drown in resumes daily. A single job posting can attract hundreds of applications. Manual screening consumes countless hours, and qualified candidates slip through overworked recruiters’ hands.

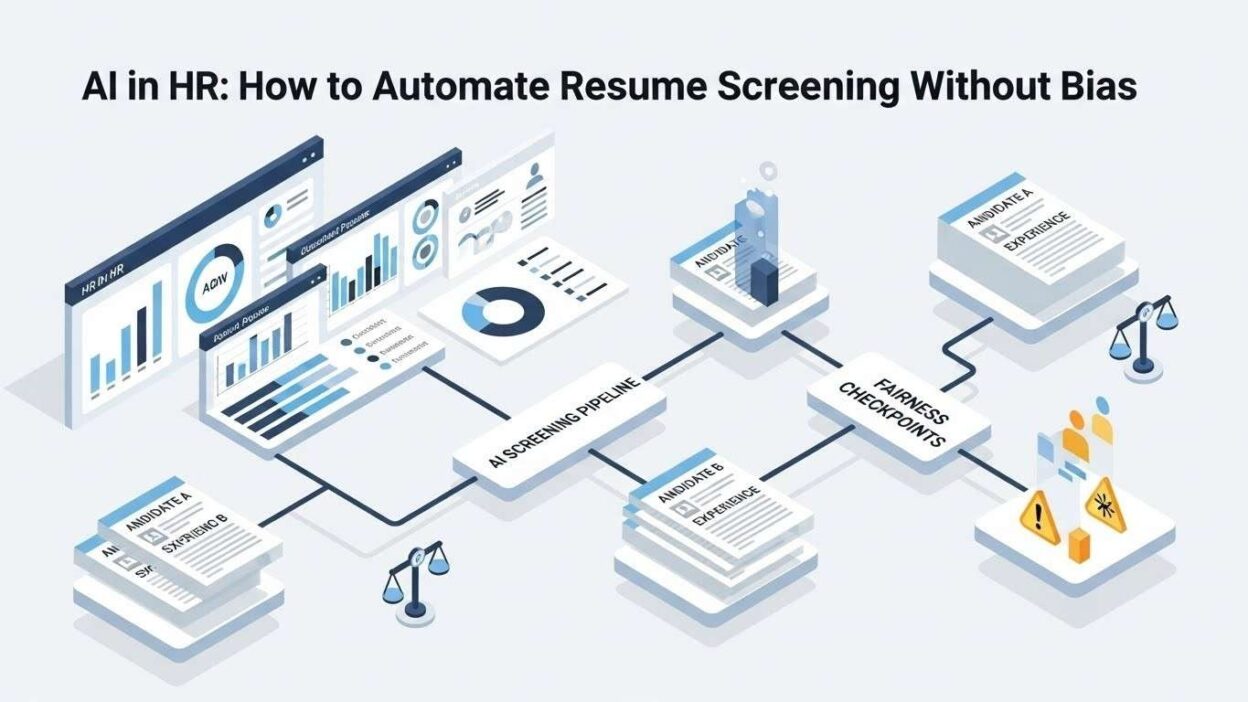

Bias-free AI resume screening software promises to solve these problems. Automation speeds up the hiring process dramatically: the technology reviews an application in seconds instead of minutes. Your team focuses on interviewing top candidates rather than sorting papers.

Yet automation introduces serious concerns about fairness. AI systems trained on past hiring data can perpetuate historical biases, letting protected characteristics influence decisions inappropriately. Companies that deploy them carelessly face legal liability and reputational damage.

This guide explains how to implement resume screening automation responsibly. You’ll learn to identify sources of bias and apply practical strategies that reduce unfair advantages and disadvantages, so your hiring becomes both efficient and equitable.

The Resume Screening Challenge

Talent acquisition teams face overwhelming application volumes. Popular companies receive thousands of resumes weekly. Each position demands thorough candidate evaluation, and manual screening simply cannot keep up.

Volume and Time Constraints

Entry-level positions attract massive applicant pools. A marketing coordinator posting can easily draw 500 or more applications, software engineering postings generate similar numbers, and administrative positions flood inboxes constantly.

Reading a resume carefully takes several minutes. At 6-8 minutes per resume, a few hundred applications add up to days of screening time for a single opening, and multiple concurrent openings create impossible workloads.
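To see how quickly this adds up, here is the arithmetic as a small Python sketch. It assumes a hypothetical posting with 500 applications (the marketing coordinator figure above), the 6-8 minute range per resume, and an 8-hour working day spent on nothing but screening.

```python
def screening_hours(num_resumes: int, minutes_per_resume: float) -> float:
    """Total reviewer time, in hours, to read every resume once."""
    return num_resumes * minutes_per_resume / 60


# Hypothetical posting with 500 applications, read at 6 and at 8 minutes each.
for minutes in (6, 8):
    hours = screening_hours(500, minutes)
    # Assumes an 8-hour working day spent entirely on screening.
    print(f"{minutes} min/resume: {hours:.1f} hours, about {hours / 8:.1f} working days")
```

Under these assumptions a single opening costs roughly 50 to 67 hours, or six to eight full working days, of reading.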

Quality inevitably suffers under time pressure. Recruiters skim rather than read carefully, fatigue clouds judgment after hours of screening, and great candidates get rejected because of reviewer exhaustion.

Speed requirements conflict with thoroughness. Companies want positions filled quickly. Comprehensive evaluation takes substantial time. The tension creates stress and suboptimal outcomes.

Hidden Bias in Manual Screening

Human reviewers carry unconscious biases, and research documents them consistently. In field experiments, otherwise identical resumes received different responses depending on whether the applicant’s name signaled a particular ethnicity or gender. These biases measurably influence hiring decisions.

Educational prestige affects evaluations disproportionately. Ivy League degrees often receive favorable treatment by default, while state school graduates face uphill battles. Skills and achievements can end up mattering less than institutional brand.

Employment gaps trigger negative assumptions. Reviewers imagine worst-case scenarios. Legitimate reasons for gaps get overlooked. Parents returning to work face particular discrimination.

Age bias affects both young and experienced candidates. Recent graduates are dismissed for lacking “sufficient experience,” while older workers are labeled “overqualified.” The range of acceptable candidate ages narrows artificially.

Bias-free AI resume screening software aims to remove these subjective influences. Algorithms evaluate every application against the same objective criteria, consistently. Done well, this leaves far less room for individual reviewers’ prejudices; done carelessly, it introduces biases of its own, as the next section explains.

Legal and Compliance Risks

Employment discrimination lawsuits can cost companies millions. A single biased decision in manual screening is hard to prove, but statistical patterns across many decisions can reveal disparate impact. Legal liability grows with every biased decision.

EEOC guidelines require fair hiring practices, and protected classes deserve equal consideration. Age, race, gender, and disability discrimination violate federal law, and state laws often add further protections.

Diversity initiatives demand measurement and accountability. Companies commit to inclusive hiring publicly. Manual processes make tracking difficult. Demonstrating progress requires systematic approaches.

Documentation requirements strain manual processes. Applicant tracking systems capture basic information. Detailed evaluation rationale often goes unrecorded. Legal discovery exposes inadequate documentation.

Understanding AI Bias Sources

Artificial intelligence learns from historical data. Past hiring decisions train screening algorithms. The system replicates patterns it observes. Bias in training data produces biased AI outputs.

Historical Hiring Pattern Replication

Many companies train AI on the profiles of successful employees. These employees reflect past hiring decisions, which were systematically shaped by human biases. The AI learns these biases as “success patterns.”

Tech companies historically hired predominantly male engineers. AI trained on this data learns to favor male candidates, for example by penalizing resumes that mention women’s organizations. Gender bias perpetuates itself automatically.

Financial services historically preferred certain universities. AI learns these institutional preferences. Graduates from other schools face algorithmic discrimination. Educational diversity decreases rather than increases.

Bias-free AI resume screening software requires careful curation of training data. Historical decisions need bias auditing, and problematic patterns must be removed. Clean training data produces fairer algorithms.

Proxy Variables and Indirect Discrimination

AI discovers patterns humans miss. Some patterns correlate with protected characteristics. The algorithm uses these proxies unintentionally. Indirect discrimination results from seemingly neutral criteria.

Zip codes correlate strongly with race and ethnicity because of residential segregation. An AI that learns to favor certain geographic areas effectively discriminates by residence, reproducing the segregated housing patterns behind those correlations.

College sports and Greek life memberships reveal gender patterns. AI uses these signals for screening decisions. Women face disadvantages from participation patterns. Indirect gender bias occurs without explicit gender consideration.

Names indicate ethnicity and national origin. AI learns correlations between names and performance. The system ranks candidates differently based on names. Discrimination happens through proxy identification.

Language patterns reveal age and generation. Older candidates use different terminology. AI interprets these differences as capability signals. Age discrimination occurs algorithmically.
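One way to catch proxies before a model learns them is to measure how strongly each candidate feature is associated with a protected attribute. The sketch below computes Cramér’s V, a standard association measure for two categorical variables (0 means no association, 1 means the feature fully determines the group). It assumes you hold protected-attribute data, such as voluntary self-identification, for auditing only; the zip codes and group labels are made up, and what counts as “too strong” an association is a judgment call.

```python
from collections import Counter
from math import sqrt


def cramers_v(feature: list[str], group: list[str]) -> float:
    """Cramér's V between a candidate feature and a protected attribute.

    0 means no association; 1 means the feature fully determines the group.
    """
    if len(feature) != len(group) or not feature:
        raise ValueError("need two non-empty lists of equal length")
    n = len(feature)
    row_totals = Counter(feature)
    col_totals = Counter(group)
    if len(row_totals) < 2 or len(col_totals) < 2:
        return 0.0  # a constant variable carries no association
    observed = Counter(zip(feature, group))
    chi2 = 0.0
    for r, r_total in row_totals.items():
        for c, c_total in col_totals.items():
            expected = r_total * c_total / n  # count expected under independence
            chi2 += (observed[(r, c)] - expected) ** 2 / expected
    k = min(len(row_totals), len(col_totals))
    return sqrt(chi2 / (n * (k - 1)))


# Hypothetical audit data: zip code and self-identified group per applicant.
zip_codes = ["10001", "10001", "20002", "20002"]
groups = ["group_a", "group_a", "group_b", "group_b"]
print(cramers_v(zip_codes, groups))  # 1.0: here zip code perfectly predicts group
```

A feature that scores high on this check is a candidate for removal or closer review, even if it looks neutral on its face.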

Feature Selection Bias

Developers choose which resume elements AI considers. These choices embed assumptions about candidate quality. Some relevant factors get excluded. Others receive undue importance.

When continuous employment history is weighted heavily, parents who took career breaks face systematic disadvantages and veterans are penalized for military service interruptions. Employment gap penalties discriminate indirectly.

Specific technical skills dominate evaluation criteria while transferable skills receive insufficient consideration. Career changers struggle against narrow definitions, and the innovation that diverse hiring brings decreases.

Keyword matching favors certain communication styles. Candidates who use industry jargon score higher, while those from non-traditional backgrounds may not use the expected terminology. “Cultural fit” becomes coded language for homogeneity.

Bias-free AI resume screening software examines feature selection critically. Developers audit which factors influence decisions, and discriminatory features are removed or reweighted so that skills and qualifications take priority over proxies.

Building Bias-Free Screening Systems

Creating fair AI requires intentional design choices. Default approaches produce biased outcomes. Specific interventions reduce discrimination systematically.

Diverse Training Data

Representative training data prevents bias amplification. Historical hiring data needs supplementation. Include successful employees from underrepresented groups. Sample sizes must support statistical validity.

Synthetic data generation fills representation gaps. Create artificial resumes matching diverse profiles so the algorithm is exposed to varied backgrounds. A lack of historical data shouldn’t perpetuate exclusion.

External benchmarks provide comparison standards. Industry hiring statistics reveal representation levels, and your training data should match or exceed diversity benchmarks. Shortfalls point to potential sources of bias.

Regular training data audits maintain fairness. Analyze data composition quarterly. Identify emerging representation problems. Continuous monitoring prevents bias drift over time.
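A quarterly composition audit can be as simple as comparing each group’s share of the training data with an external benchmark. The function name, the 5-percentage-point tolerance, and the benchmark figures below are hypothetical placeholders; substitute the industry statistics you actually benchmark against.

```python
from collections import Counter


def representation_gaps(labels: list[str], benchmark: dict[str, float],
                        tolerance: float = 0.05) -> dict[str, float]:
    """Groups whose share of the training data trails the benchmark share
    by more than `tolerance` (an absolute proportion, e.g. 0.05 = 5 points)."""
    if not labels:
        raise ValueError("training data is empty")
    counts = Counter(labels)
    total = len(labels)
    gaps = {}
    for group, expected_share in benchmark.items():
        shortfall = expected_share - counts[group] / total
        if shortfall > tolerance:
            gaps[group] = round(shortfall, 3)
    return gaps


# Hypothetical: 80/20 training data against a 55/45 industry benchmark.
labels = ["group_a"] * 80 + ["group_b"] * 20
print(representation_gaps(labels, {"group_a": 0.55, "group_b": 0.45}))
# {'group_b': 0.25}
```

Running the same check every quarter and comparing the output over time is one lightweight way to notice drift.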

Blind Screening Techniques

Remove identifying information before AI processing. Names reveal ethnicity and gender. Addresses indicate socioeconomic status. Graduation years expose age.

Bias-free AI resume screening software implements redaction automatically. The system strips identifying fields, so only job-relevant information remains and decisions focus on qualifications exclusively.
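Here is a minimal sketch of field-level redaction, assuming resumes have already been parsed into a dictionary of named fields. The field names are hypothetical. The year pattern is deliberately crude: it masks any standalone four-digit number from 1900 to 2099, and it will not catch a name mentioned inside free text, which in practice needs a proper parser or named-entity recognition.

```python
import re

# Hypothetical field names produced by an upstream resume parser.
IDENTIFYING_FIELDS = {"name", "email", "phone", "address", "photo_url", "date_of_birth"}
# Crude year matcher: any standalone four-digit number from 1900 to 2099.
YEAR_PATTERN = re.compile(r"\b(?:19|20)\d{2}\b")


def redact_resume(resume: dict[str, object]) -> dict[str, object]:
    """Drop identifying fields and mask years in the remaining text fields."""
    redacted = {}
    for field_name, value in resume.items():
        if field_name in IDENTIFYING_FIELDS:
            continue  # remove the field entirely before the model sees it
        if isinstance(value, str):
            value = YEAR_PATTERN.sub("[YEAR]", value)
        redacted[field_name] = value
    return redacted


resume = {
    "name": "Jane Doe",
    "email": "jane@example.com",
    "education": "B.S. Computer Science, 2009",
    "skills": "SQL, Python, stakeholder reporting",
}
print(redact_resume(resume))
# {'education': 'B.S. Computer Science, [YEAR]', 'skills': 'SQL, Python, stakeholder reporting'}
```

Masking years hides graduation dates but also tenure lengths; whether that trade-off is acceptable depends on how much weight the role puts on years of experience.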

Education details get standardized carefully. Institution names shouldn’t drive decisions. Degree type and field matter more than prestige. Equivalency recognition levels the playing field.

Work history anonymization removes company names. Industry and role descriptions provide sufficient context. Prestigious employer bias disappears. Accomplishments speak for themselves.

Skills-Based Evaluation Frameworks

Define required competencies clearly before screening. List specific technical skills needed. Identify relevant soft skills. Prioritize demonstrated abilities over credentials.

Map resume contents to skill requirements systematically. Text analysis extracts relevant capabilities. Achievement descriptions reveal competency levels. Concrete evidence outweighs impressive-sounding titles.

Weight skills according to job importance. Mission-critical capabilities dominate scoring. Nice-to-have skills contribute minimally. Clear prioritization improves candidate-job matching.
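A weighted skills score might look like the following sketch. It assumes skills have already been extracted from the resume text (the hard part in practice) and uses a hypothetical weight table for a data analyst role, where mission-critical skills carry three times the weight of nice-to-haves.

```python
# Hypothetical weights for a data analyst role: 3 = mission-critical, 1 = nice to have.
SKILL_WEIGHTS = {"sql": 3.0, "python": 3.0, "data visualization": 2.0, "tableau": 1.0}


def skill_score(candidate_skills: set[str], weights: dict[str, float]) -> float:
    """Fraction of the total skill weight covered by the candidate (0 to 1)."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    normalized = {skill.strip().lower() for skill in candidate_skills}
    matched = sum(w for skill, w in weights.items() if skill in normalized)
    return matched / total


print(round(skill_score({"SQL", "Python", "Excel"}, SKILL_WEIGHTS), 3))  # 0.667
print(round(skill_score({"Tableau"}, SKILL_WEIGHTS), 3))  # 0.111
```

Excel earns nothing here simply because it is absent from the weight table, which shows how a narrow skill list can undervalue related experience; an equivalency map granting partial credit is one way to widen it.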

Alternative experience recognition expands talent pools. Volunteer work demonstrates skills validly. Personal projects show technical capabilities. Non-traditional paths to competency get credited.

Continuous Bias Testing

Deploy bias detection throughout development. Test algorithm outputs against protected class membership; statistical disparities indicate problems, and any disparity beyond a predefined threshold should trigger investigation and correction.

Conduct regular fairness audits post-deployment. Compare pass-through rates across demographic groups. Significant differences demand explanation. Disparate impact analysis guides adjustments.
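To tell whether a gap in pass-through rates is larger than chance would explain, a two-proportion z-test is one common choice. This sketch uses the pooled normal approximation, which is reasonable when each group has a fair number of both passes and rejections; the counts are hypothetical.

```python
from math import erfc, sqrt


def two_proportion_z_test(passed_a: int, total_a: int,
                          passed_b: int, total_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference in pass-through rates between two groups.

    Uses the pooled-proportion normal approximation; returns (z, p_value).
    """
    rate_a = passed_a / total_a
    rate_b = passed_b / total_b
    pooled = (passed_a + passed_b) / (total_a + total_b)
    se = sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        return 0.0, 1.0  # everyone passed or everyone failed: no difference to test
    z = (rate_a - rate_b) / se
    p_value = erfc(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical screen-in counts: 120 of 400 in group A, 60 of 300 in group B.
z, p = two_proportion_z_test(120, 400, 60, 300)
print(f"z = {z:.2f}, p = {p:.4f}")
```

For these counts z is about 3.0 and p falls well below 0.01, so the 10-point gap warrants an explanation. Significance alone does not settle the matter, since very large applicant pools make even tiny gaps significant, which is why a practical threshold such as the four-fifths rule is used alongside it.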

A/B testing validates bias reduction interventions. Run parallel screening with and without interventions. Measure fairness improvements quantitatively. Data-driven iteration optimizes outcomes.

Bias-free AI resume screening software requires ongoing vigilance. Bias creeps in through subtle channels; continuous monitoring catches problems early and prevents fairness degradation.

Implementation Best Practices

Successful deployment requires more than good technology. Organizational practices determine actual fairness. Process design matters as much as algorithms.

Human-in-the-Loop Reviews

AI should assist rather than replace human judgment. Algorithms screen for minimum qualifications. Humans make final selection decisions. This partnership maximizes both efficiency and fairness.

Set appropriate confidence thresholds for automation. High-confidence matches advance automatically. Borderline candidates receive human review. Uncertain cases get extra attention.
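The threshold logic can be sketched as a small routing function. It assumes the model outputs a probability that a candidate meets the minimum qualifications; the 0.9 cut-off and the 0.15 uncertainty band around 0.5 are hypothetical values to tune against your own audits. Nothing is rejected automatically: every candidate who does not clearly advance goes to a person.

```python
def route_candidate(p_qualified: float, advance_at: float = 0.9,
                    uncertain_band: float = 0.15) -> str:
    """Route a candidate using the model's probability of meeting minimum qualifications.

    Thresholds are hypothetical defaults; no branch rejects anyone automatically.
    """
    if not 0.0 <= p_qualified <= 1.0:
        raise ValueError("p_qualified must be between 0 and 1")
    if p_qualified >= advance_at:
        return "advance"        # high-confidence match moves forward
    if abs(p_qualified - 0.5) <= uncertain_band:
        return "extra_review"   # model is unsure either way: give it extra attention
    return "human_review"       # borderline or likely unqualified: a person decides


for p in (0.95, 0.55, 0.2):
    print(p, route_candidate(p))
# 0.95 advance
# 0.55 extra_review
# 0.2 human_review
```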

Train reviewers on bias awareness. Unconscious bias affects everyone. Regular training maintains sensitivity. Diverse review teams catch different blind spots.

Create structured evaluation rubrics. Standardized criteria reduce subjective interpretation. Consistent scoring improves fairness. Documentation supports defensible decisions.

Transparent Selection Criteria

Communicate screening criteria to candidates clearly. Job postings should list required skills, and evaluation factors should be stated explicitly. Transparency builds trust and enables self-selection.

Provide feedback to rejected candidates. Generic rejections frustrate applicants. Specific improvement areas help candidates develop. Constructive feedback demonstrates respect.

Explain AI’s role in screening honestly. Candidates deserve to know when automation is involved. Describe how the technology supports fairness; transparency about methods builds credibility.

Allow candidates to challenge automated decisions. Appeal processes catch algorithmic errors. Human review provides safety nets. Second chances reveal false negatives.

Regular Algorithm Audits

Third-party audits validate fairness claims. External experts bring objectivity. Independent assessment reveals blind spots. Audit reports demonstrate commitment to equity.

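Test for disparate impact systematically. Compare selection rates across demographic groups; statistically significant differences indicate problems. The four-fifths rule provides a practical threshold: a group selected at less than 80% of the rate of the most-selected group shows evidence of adverse impact.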
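The four-fifths check is straightforward to compute. This sketch divides each group’s selection rate by the highest group’s rate and flags any group below 0.8; the group names and counts are hypothetical.

```python
def impact_ratios(selected: dict[str, int], applied: dict[str, int]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = {g: selected.get(g, 0) / applied[g] for g in applied}
    top = max(rates.values())
    if top == 0:
        raise ValueError("no group has any selections; ratios are undefined")
    return {g: rate / top for g, rate in rates.items()}


def four_fifths_flags(selected: dict[str, int], applied: dict[str, int],
                      threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls below the four-fifths (80%) threshold."""
    ratios = impact_ratios(selected, applied)
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)


applied = {"group_a": 200, "group_b": 150}
selected = {"group_a": 60, "group_b": 30}
print(four_fifths_flags(selected, applied))  # ['group_b']
```

Here group_b is selected at 20% versus 30% for group_a, an impact ratio of about 0.67, so it is flagged.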

Examine false positive and false negative rates. Different error types affect groups unequally. Error rate equality matters as much as overall accuracy. Fairness requires balanced performance.
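Comparing error rates requires knowing which candidates were actually qualified, for example from a validation sample that humans reviewed in full; the sketch below assumes such labels exist. It reports the false positive rate (unqualified candidates advanced) and the false negative rate (qualified candidates screened out) for each group. The sample data is hypothetical.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """records: iterable of (group, predicted_pass, actually_qualified) tuples.

    Returns {group: {"fpr": false positive rate, "fnr": false negative rate}}.
    A rate is NaN when the group has no examples of that class.
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for group, predicted, actual in records:
        c = counts[group]
        if predicted and actual:
            c["tp"] += 1
        elif predicted:
            c["fp"] += 1   # advanced, but not actually qualified
        elif actual:
            c["fn"] += 1   # screened out, but actually qualified
        else:
            c["tn"] += 1
    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]
        positives = c["tp"] + c["fn"]
        rates[group] = {
            "fpr": c["fp"] / negatives if negatives else float("nan"),
            "fnr": c["fn"] / positives if positives else float("nan"),
        }
    return rates


# Hypothetical validation sample: (group, model advanced?, actually qualified?)
sample = [
    ("group_a", True, True), ("group_a", False, True),
    ("group_a", True, False), ("group_a", False, False),
    ("group_b", True, True), ("group_b", True, True),
    ("group_b", False, False), ("group_b", False, False),
]
print(error_rates_by_group(sample))
# {'group_a': {'fpr': 0.5, 'fnr': 0.5}, 'group_b': {'fpr': 0.0, 'fnr': 0.0}}
```

In this toy sample the model screens out half of group_a’s qualified candidates and none of group_b’s, a disparity that a single overall accuracy figure would hide.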

Vendors of bias-free AI resume screening software publish audit results transparently. Accountability builds stakeholder trust. Honest acknowledgment of limitations maintains credibility. Continuous improvement demonstrates good faith.

Diverse Development Teams

Algorithm designers bring their own biases. Homogeneous teams miss important perspectives, and diverse developers are better placed to build fairer systems.

Include affected communities in design. Those experiencing discrimination understand impacts deeply. Their insights improve fairness interventions. Participatory design produces better outcomes.

Cross-functional teams catch different issues. Technical experts ensure algorithmic soundness. HR professionals understand hiring realities. Legal advisors identify compliance risks. Candidates provide user perspectives.

Technology Selection Criteria

Many vendors offer AI screening solutions. Quality varies dramatically across products. Careful evaluation protects your organization.

Explainability and Transparency

Understand how algorithms make decisions. Black box systems create accountability problems. Explainable AI enables bias detection. Transparency supports trust and compliance.

Request detailed technical documentation. Vendors should explain their methodology. Training data sources matter enormously. Feature importance rankings reveal priorities.

Test explanation quality before purchasing. Generate sample screening decisions. Request explanations for rankings. Vague explanations indicate weak interpretability.

Bias-free AI resume screening software provides clear decision rationale. Recruiters understand why candidates advance or stop. Explanations enable meaningful human oversight. Transparency distinguishes quality products.

Bias Testing and Validation

Demand evidence of fairness testing. Vendors should conduct regular audits and share the results publicly. Unvalidated fairness claims mean nothing.

Request disaggregated performance metrics. Overall accuracy hides disparate impact. Examine success rates by demographic group. Equal performance across groups indicates fairness.

Conduct your own bias testing. Use representative sample data. Analyze results independently. Vendor claims require verification.

Ask about ongoing monitoring. Fairness requires continuous attention. Algorithms drift over time. Regular revalidation maintains equity.

Customization Capabilities

Your hiring needs differ from other companies. Generic algorithms may not fit. Customization enables organization-specific fairness.

Configure screening criteria to match jobs. Different roles require different skills. Weight factors according to importance. Customization improves candidate-role matching.

Add organization-specific equivalencies. Recognize diverse educational paths. Credit relevant alternative experiences. Customization expands talent pool access.

Adjust for your bias testing results. Correct discovered disparities. Reweight problematic features. Customization enables continuous improvement.

Integration and Usability

Technology must integrate with existing systems. Standalone tools create workflow problems. ATS integration enables seamless adoption.

User interface quality affects usage patterns. Complicated interfaces get circumvented. Intuitive design encourages proper use. Usability directly impacts fairness.

Mobile accessibility supports modern recruiting. Recruiters work from various locations. Responsive design enables flexibility. Access shouldn’t require specific devices.

Bias-free AI resume screening software fits your workflow naturally. Adoption happens smoothly without disruption. Ease of use prevents workarounds. Good design supports consistent application.

Measuring Success and Impact

Implementation requires accountability. Metrics demonstrate whether bias reduction works. Transparency about results maintains credibility.

Diversity Metrics

Track applicant pool demographics and compare them against the general population. Identify underrepresented groups; outreach should address the gaps.

Measure pass-through rates at each stage. Screen-in rates reveal AI impact. Interview invitation rates show overall pipeline. Offer acceptance rates indicate candidate experience.

Calculate representation in final hires. Compare against applicant pool composition. Improvements demonstrate success. Persistent disparities indicate remaining problems.

Monitor time-to-hire across groups. Longer times for certain demographics suggest bias. Equal treatment produces similar timelines. Speed disparities warrant investigation.

Quality of Hire Indicators

New employee performance validates screening effectiveness. Track productivity and success rates. Analyze retention across demographic groups. Quality hiring benefits everyone equally.

Manager satisfaction surveys reveal hiring quality. Compare satisfaction across team composition. Uneven ratings suggest problems. Consistent satisfaction indicates fair evaluation.

Promotion rates show whether screening identifies growth potential. Screen candidates for development capacity, and track advancement across demographic groups. Equal opportunity produces equitable outcomes.

Bias-free AI resume screening software improves overall hiring quality. Better candidate-role matching increases satisfaction. Reduced bias expands talent pool access. Business outcomes improve alongside fairness.

Efficiency Gains

Measure time savings from automation. Compare before and after screening duration. Calculate recruiter productivity improvements. Efficiency justifies technology investment.

Track cost per hire reductions. Faster screening reduces expenses. Better matching decreases turnover costs. ROI calculation validates spending.

Monitor candidate experience metrics. Application completion rates indicate process quality. Time-to-feedback affects candidate satisfaction. Efficiency improvements shouldn’t sacrifice experience.

Legal and Ethical Considerations

Responsible AI screening requires legal compliance. Ethical obligations extend beyond minimum requirements. Both dimensions demand serious attention.

Regulatory Compliance

EEOC guidance addresses automated hiring tools. Employers remain liable for AI decisions. Technology doesn’t eliminate legal obligations. Compliance requires active management.

Disparate impact doctrine applies to AI screening. Neutral policies that produce unequal outcomes can violate the law unless they are job-related and consistent with business necessity. Statistical evidence demonstrates discrimination, and impact matters even where there is no intent.

Where rejections must be explained, as with adverse action notices, candidates deserve specific reasons. AI decision opacity complicates explanation, so systems must generate defensible rationale.

Record retention requirements apply to automated screening. Maintain applicant data appropriately. Documentation supports compliance defense. Inadequate records create legal vulnerabilities.

Algorithmic Accountability

Someone must answer for AI decisions. Assign clear responsibility within the organization. Accountability prevents negligence, and designated oversight ensures attention.

Establish governance processes for AI systems. Regular reviews assess ongoing fairness. Decision authority for corrections needs definition. Process clarity prevents drift.

Create escalation procedures for bias discovery. Rapid response prevents extended harm. Correction protocols enable quick fixes. Speed minimizes impact.

Bias-free AI resume screening software includes accountability features. Audit trails track all decisions, responsibility assignments stay clear, and governance becomes easier to manage.
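An audit trail entry might record who was screened, by which model version, with what outcome and reasons, and who is accountable. The schema below is a hypothetical illustration rather than a standard, written as a Python dataclass serialized to JSON lines; every field name and ID is an assumption.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


def _utc_now() -> str:
    return datetime.now(timezone.utc).isoformat()


@dataclass(frozen=True)
class ScreeningDecision:
    """One audit-trail entry. Field names are illustrative, not a standard schema."""
    candidate_id: str            # pseudonymous ID, not the candidate's name
    requisition_id: str
    model_version: str           # ties the decision to the exact model that made it
    score: float
    outcome: str                 # e.g. "advance", "human_review"
    reasons: list[str]           # top factors behind the score, kept for explanations
    reviewer: Optional[str] = None   # person accountable for the final call, if any
    timestamp: str = field(default_factory=_utc_now)


def to_log_line(decision: ScreeningDecision) -> str:
    """Serialize a decision as one JSON line."""
    return json.dumps(asdict(decision), sort_keys=True)


def append_to_log(decision: ScreeningDecision, path: str) -> None:
    """Append one decision; appending rather than overwriting keeps earlier entries."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(to_log_line(decision) + "\n")


decision = ScreeningDecision("cand-0042", "req-17", "screener-v3", 0.91,
                             "advance", ["sql", "python"], reviewer="recruiter-07")
print(to_log_line(decision))
```

Recording the model version alongside each decision lets an auditor tie a disparity found later back to the exact model that produced it.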

Candidate Rights and Privacy

Inform candidates about AI usage. Transparency respects autonomy. Hidden automation feels deceptive. Honest disclosure builds trust.

Obtain appropriate consent for data processing, which privacy laws in many jurisdictions require. Explain how data gets used, and respect candidate control over information.

Protect candidate data security. Resumes contain sensitive information. Breaches harm individuals significantly. Robust security demonstrates respect.

Honor data deletion requests. Candidates control their information. Compliance shows good faith. Privacy respect attracts quality candidates.

Future of Bias-Free Screening

Technology and practices continue evolving. Emerging developments promise further improvements. Staying current maintains competitive advantage.

Advanced Fairness Techniques

Fairness-aware machine learning advances rapidly. New algorithms optimize explicitly for equity. Multi-objective optimization balances accuracy and fairness. Technical progress enables better outcomes.

Causal inference methods help identify true discrimination. Correlation doesn’t prove causation, and sophisticated analysis distinguishes legitimate from illegitimate factors. Better understanding improves interventions.

Counterfactual fairness asks whether changing protected characteristics alters decisions. Decisions should depend only on qualifications. This standard provides strong protection. Implementation complexity continues decreasing.
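A simple version of this check swaps the protected attribute, or a stand-in such as the name, and confirms the score does not move. This is a simplified probe rather than full counterfactual fairness, which requires a causal model of how the attribute influences other resume features. The scorers below are toy examples, and the placeholder names stand in for names associated with different groups.

```python
from typing import Callable


def flip_test(score_fn: Callable[[dict], float], resume: dict, attribute: str,
              alternatives: list, tol: float = 1e-9) -> bool:
    """True if the score stays within `tol` when only `attribute` changes."""
    baseline = score_fn(resume)
    for value in alternatives:
        variant = {**resume, attribute: value}  # copy with one field swapped
        if abs(score_fn(variant) - baseline) > tol:
            return False
    return True


# Two toy scorers (hypothetical): one ignores the name, one does not.
def fair_scorer(resume: dict) -> float:
    return float(len(resume["skills"]))


def biased_scorer(resume: dict) -> float:
    bonus = 0.5 if resume["name"] == "Candidate A" else 0.0
    return float(len(resume["skills"])) + bonus


resume = {"name": "Candidate A", "skills": ["sql", "python"]}
print(flip_test(fair_scorer, resume, "name", ["Candidate B", "Candidate C"]))    # True
print(flip_test(biased_scorer, resume, "name", ["Candidate B", "Candidate C"]))  # False
```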

Continuous Learning Systems

Algorithms improve from feedback continuously. Every hiring outcome teaches the system, and performance tracking enables adaptation. Learning continues for as long as the system runs.

Bias-free AI resume screening software incorporates ongoing learning. Successful hires inform future screening. Poor matches adjust algorithm priorities. Systems become smarter over time.

Human corrections train AI directly. Recruiter adjustments indicate algorithm errors. Systems learn from these interventions. Collaboration produces continuous improvement.

Holistic Candidate Evaluation

Future systems assess candidates more completely. Skills testing integration validates resume claims. Work samples demonstrate actual capabilities. Multiple data sources improve decisions.

Behavioral assessments predict cultural contribution. Values alignment matters for retention. Scientific instruments reduce subjective judgments. Comprehensive evaluation improves matching.

Video interview analysis supplements screening. Communication skills assessment requires observation. AI analyzes verbal and nonverbal cues. Technology enables richer evaluation.

Conclusion

Resume screening automation delivers enormous efficiency gains. Manual processes cannot handle modern application volumes. Technology enables faster hiring cycles. Productivity improvements justify investment.

Bias-free AI resume screening software helps make automation ethical and legally defensible. Careful design prevents discrimination. Intentional interventions reduce unfair disadvantages. Technology can advance rather than hinder equity.

Historical hiring patterns contain systematic biases. AI trained on this data replicates discrimination. Proxy variables enable indirect bias. Feature selection embeds assumptions.

Building fair systems requires specific interventions. Diverse training data prevents bias amplification. Blind screening removes identifying information. Skills-based evaluation prioritizes qualifications. Continuous testing catches emerging problems.

Implementation best practices ensure actual fairness. Human oversight provides accountability. Transparent criteria build trust. Regular audits maintain vigilance. Diverse development teams catch blind spots.

Technology selection demands careful evaluation. Explainability enables bias detection. Validation evidence proves fairness claims. Customization fits your specific needs. Integration supports consistent usage.

Success measurement requires comprehensive metrics. Diversity indicators reveal representation patterns. Quality measures validate screening effectiveness. Efficiency gains demonstrate business value.

Legal compliance demands active management. Regulatory requirements apply to automated decisions. Algorithmic accountability prevents negligence. Candidate rights deserve respect.

The future promises continued improvement. Advanced techniques enhance fairness further. Continuous learning enables adaptation. Holistic evaluation improves matching quality.

Bias-free AI resume screening software represents responsible automation. Efficiency and equity advance together. Your hiring becomes both faster and fairer. Technology serves human values appropriately.

Start implementing fair screening systems today. Audit current processes for bias. Select technology carefully. Train teams thoroughly. Monitor results continuously.

Commitment to fairness attracts quality candidates. Diverse teams often outperform homogeneous ones. Ethical hiring strengthens employer brand. The business case and moral imperative align.

Your talent acquisition transformation begins now. Bias-free AI resume screening software makes excellence accessible. Every candidate deserves fair consideration, and technology can help deliver on this promise.